# AI Recommendations API

Source: https://docs.dappier.com/ai_recommendations_api

Convert any data into a recommendation engine with Dappier's AI-powered Article Recommendations API. This API uses a RAG (Retrieval-Augmented Generation) model to return article recommendations based on the input query or URL.

You'll need an API key to access this API. Visit [Dappier Platform](https://platform.dappier.com) to sign up and create an API key under Settings > Profile > API Keys.

#### Using the AI Recommendations API

This section shows how to retrieve recommendations from natural language queries or URLs. You can get the ID of the desired data model from the [Dappier Marketplace](https://marketplace.dappier.com). Data model IDs start with `dm_`.

The API reference for this endpoint can be found [here](https://docs.dappier.com/api-reference/endpoint/ai-recommendations).

```python Python
import json

import requests

api_key = "YOUR_API_KEY"  # replace with your Dappier API key
url = "https://api.dappier.com/app/datamodel/dm_01j0pb465keqmatq9k83dthx34"

payload = json.dumps({
    "query": "lifestyle new articles",
    "similarity_top_k": 3,
    "ref": "",
    "num_articles_ref": 0,
    "search_algorithm": "most_recent",
    "page": 1,
    "num_results": 10
})

headers = {
    "Content-Type": "application/json",
    "Authorization": f"Bearer {api_key}",
}

response = requests.post(url, headers=headers, data=payload)
response.raise_for_status()  # fail loudly on 4xx/5xx responses
print(response.text)
```

```go Go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net/http"
)

func main() {
	url := "https://api.dappier.com/app/datamodel/dm_01j0pb465keqmatq9k83dthx34"
	apiKey := "YOUR_API_KEY" // replace with your Dappier API key

	query := `{
		"query": "lifestyle new articles",
		"similarity_top_k": 3,
		"ref": "",
		"num_articles_ref": 0,
		"search_algorithm": "most_recent",
		"page": 1,
		"num_results": 10
	}`

	req, err := http.NewRequest("POST", url, bytes.NewBuffer([]byte(query)))
	if err != nil {
		fmt.Println("Error creating request:", err)
		return
	}
	req.Header.Set("Authorization", "Bearer "+apiKey)
	req.Header.Set("Content-Type", "application/json")

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		fmt.Println("Error making request:", err)
		return
	}
	defer resp.Body.Close()

	// io.ReadAll replaces the deprecated ioutil.ReadAll.
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Println("Error reading response:", err)
		return
	}
	fmt.Println(string(body))
}
```

```bash cURL
curl --location --request POST 'https://api.dappier.com/app/datamodel/dm_01j0pb465keqmatq9k83dthx34' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: Bearer YOUR_API_KEY' \
  --data-raw '{
    "query": "lifestyle new articles",
    "similarity_top_k": 3,
    "ref": "",
    "num_articles_ref": 0,
    "search_algorithm": "most_recent",
    "page": 1,
    "num_results": 10
  }'
```

```json response
{
  "results": [
    {
      "author": "Rusty Weiss",
      "image_url": "https://images.dappier.com/dm_01j0pb465keqmatq9k83dthx34/Olympics-Rugby-Sevens-Women-Semifinal-USA-vs-NZL-23861886-scaled-e1725556056406_.jpg?width=428&height=321",
      "preview_content": "American rugby player Ilona Maher’s popularity is at its absolute peak. Demand for her has skyrocketed since her performance in […]\n\nThe post Olympic Rugby Hero Ilona Maher Joins ‘Dancing With The Stars’ appeared first on Bounding Into Sports .\n",
      "pubdate": "Thu, 05 Sep 2024 17:30:27 +0000",
      "site": "Bounding Into Sports",
      "site_domain": "www.boundingintosports.com",
      "title": "Olympic Rugby Hero Ilona Maher Joins ‘Dancing With The Stars’",
      "url": "https://api.dappier.com/app/track/NB2HI4DTHIXS653XO4XGE33VNZSGS3THNFXHI33TOBXXE5DTFZRW63JPGIYDENBPGA4S633MPFWXA2LDFVZHKZ3CPEWWQZLSN4WWS3DPNZQS23LBNBSXELLKN5UW44ZNMRQW4Y3JNZTS253JORUC25DIMUWXG5DBOJZS6===?type=article_click&site_domain=www.boundingintosports.com&datamodel_id=dm_01j0pb465keqmatq9k83dthx34&request_id=3ac73c01974c-kqHLh38Cqy-2824988&origin="
    },
    ...
  ]
}
```
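Most applications only need a few fields from each recommendation. The sketch below reduces the response to title, site, URL, and publication time. It assumes the response shape shown above (a top-level `results` list whose items carry `title`, `site`, `pubdate`, and `url`). The helper name `summarize_recommendations` is hypothetical.

```python
import json
from email.utils import parsedate_to_datetime


def summarize_recommendations(response_text: str) -> list[dict]:
    """Return title/site/url/published for each recommended article.

    Assumes the response shape shown above: {"results": [{...}, ...]}.
    """
    data = json.loads(response_text)
    summaries = []
    for item in data.get("results", []):
        pubdate = item.get("pubdate")
        summaries.append({
            "title": item.get("title", ""),
            "site": item.get("site", ""),
            # The url appears to be a click-tracking link (type=article_click),
            # so it is passed through unchanged.
            "url": item.get("url", ""),
            # pubdate is RFC 2822 style, e.g. "Thu, 05 Sep 2024 17:30:27 +0000".
            "published": parsedate_to_datetime(pubdate) if pubdate else None,
        })
    return summaries


if __name__ == "__main__":
    sample = json.dumps({"results": [{
        "title": "Olympic Rugby Hero Ilona Maher Joins ‘Dancing With The Stars’",
        "site": "Bounding Into Sports",
        "pubdate": "Thu, 05 Sep 2024 17:30:27 +0000",
        "url": "https://api.dappier.com/app/track/...",
    }]})
    for article in summarize_recommendations(sample):
        print(article["published"].date(), article["site"], "-", article["title"])
```

Parsing `pubdate` into a timezone-aware `datetime` lets you sort or filter results by recency without string handling.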

# AI Recommendations API

Source: https://docs.dappier.com/api-reference/endpoint/ai-recommendations

post /app/v2/search

Retrieve articles based on queries or URLs, powered by semantic search.

# Real Time Search

Source: https://docs.dappier.com/api-reference/endpoint/real-time-search

post /app/aimodel/{ai_model_id}

Access real-time search with Dappier's AI models — from live web search and breaking news to stock prices and financial insights. Fast, reliable, and ready for monetization.
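The sketch below calls this endpoint using only the Python standard library. It assumes that the request body is a JSON object with a single `query` field and that the API key is sent as a Bearer token, as in the recommendations examples above. Verify both assumptions against the API reference. `am_01j06ytn18ejftedz6dyhz2b15` is the model ID used by the cookbook recipes in this document. The function names are hypothetical.

```python
import json
import urllib.request

API_BASE = "https://api.dappier.com/app/aimodel"


def build_realtime_request(ai_model_id: str, query: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST /app/aimodel/{ai_model_id} request.

    Assumption: the body is {"query": "..."}; check the API reference.
    """
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/{ai_model_id}",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


def realtime_search(ai_model_id: str, query: str, api_key: str) -> dict:
    """Send the request and decode the JSON response body."""
    req = build_realtime_request(ai_model_id, query, api_key)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Only builds the request; call realtime_search() with a real key to send it.
    req = build_realtime_request("am_01j06ytn18ejftedz6dyhz2b15",
                                 "What is the stock price of NVDA today?",
                                 "YOUR_API_KEY")
    print(req.get_method(), req.full_url)
```

The request construction is kept separate from the network call, so you can inspect or log exactly what will be sent before spending an API request.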

# Bot Deterrence

Source: https://docs.dappier.com/bot-deterrence

Bots are automated programs that perform tasks on the internet. While some bots, like search engine crawlers, are beneficial, others, like scrapers and spammers, can harm your site.

Bad bots can cause serious problems for web properties. Excessive bot traffic can put a heavy load on web servers, slowing or denying service to legitimate users (DDoS attacks are an extreme version of this scenario). Bad bots can also scrape or download content from a website, steal user credentials, take over user accounts, rapidly post spam content, and carry out various other attacks. Bot management prevents these performance and security impacts on websites, applications, and APIs. It combines security, machine learning, and web development techniques to detect bots accurately, blocking malicious activity while allowing legitimate bots to operate uninterrupted.

**What are the bot detection techniques?**

Popular bot detection techniques include:

* Browser fingerprinting – this refers to information that is gathered about a computing device for identification purposes (any browser will pass on specific data points to the connected website’s servers, such as your operating system, language, plugins, fonts, hardware, etc.)

* Browser consistency – checking for the presence of specific features that should or should not be in a browser. This can be done by running JavaScript checks in the browser.

* Behavioral inconsistencies – this involves behavioral analysis of nonlinear mouse movements, rapid button and mouse clicks, repetitive patterns, average page time, average requests per page, and similar bot behavior.

* CAPTCHA – a popular anti-bot measure, is a challenge-response type of test that often asks you to fill in correct codes or identify objects in pictures.
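The "repetitive patterns" signal under behavioral inconsistencies can be shown with a toy heuristic. Scripted clients often send requests at near-constant intervals, while human browsing is irregular. This sketch flags a client when the gaps between its requests have a low coefficient of variation (standard deviation divided by mean). The function name, the 0.1 threshold, and the minimum sample size are illustrative assumptions, not tuned values. Real bot-management products combine many such signals.

```python
from statistics import mean, pstdev


def looks_scripted(timestamps: list[float], cv_threshold: float = 0.1, min_requests: int = 5) -> bool:
    """Return True if request timing is suspiciously regular.

    timestamps: request times in seconds, in ascending order.
    cv_threshold: hypothetical cutoff for the coefficient of variation
    (stddev / mean) of the gaps between consecutive requests.
    """
    if len(timestamps) < min_requests:
        return False  # not enough evidence either way
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    avg = mean(gaps)
    if avg <= 0:
        return True  # every request arrived at the same instant
    return pstdev(gaps) / avg < cv_threshold


if __name__ == "__main__":
    bot = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]      # one request every second
    human = [0.0, 2.5, 3.1, 9.8, 11.0, 19.4]  # irregular browsing
    print(looks_scripted(bot), looks_scripted(human))  # True False
```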

**Best Practices for Bot Deterrence**

* Create a robots.txt file: A good starting point is to provide crawling instructions for bots that access your website's resources. Google's own robots.txt file is a useful reference.

* Implement CAPTCHAs: Use CAPTCHAs to distinguish between human users and bots.

* Rate Limiting: Set limits on the number of requests a user can make in a given timeframe.

* Regular Monitoring: Continuously monitor traffic and update your security settings to stay ahead of bot activity.

* Set up a web application firewall (WAF): WAFs can filter out suspicious requests and block IP addresses based on various factors.

* Use honeypot traps: Honeypots are designed to attract unwanted or malicious bots, allowing websites to detect those bots and ban their IP addresses.
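The rate-limiting practice can be sketched as a per-client sliding-window limiter. This is an in-memory illustration that assumes a single server process. The class name and limits are hypothetical. Production setups usually enforce limits at the WAF or CDN, or in a shared store such as Redis, so that all servers see the same counts.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client within any `window`-second span."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        self._hits = defaultdict(deque)  # client_id -> timestamps of accepted requests

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        hits = self._hits[client_id]
        # Drop timestamps that have slid out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: reject (e.g. respond with HTTP 429)
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(limit=3, window=10.0)
    decisions = [limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3, 11)]
    print(decisions)  # [True, True, True, False, True]
```

Rejected requests are not recorded, so a client that keeps retrying after hitting the limit regains capacity as soon as its older accepted requests age out of the window.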

**Cloudflare Bot Solutions**

Cloudflare Bot Solutions offer comprehensive protection against malicious bots that can disrupt your website's performance and security. By leveraging Cloudflare's global network and advanced machine learning algorithms, you can effectively detect and deter unwanted bot traffic, ensuring a seamless experience for your legitimate users.

Key Features:

1. Bot Fight Mode:
   * Active Defense: Automatically detects and mitigates bot traffic by deploying challenge-response tests.
   * Ease of Use: Simple to enable from the Cloudflare dashboard, providing immediate protection without complex configurations.

2. Bot Management:
   * Machine Learning Algorithms: Uses sophisticated machine learning to differentiate between good and bad bots.
   * Behavioral Analysis: Monitors traffic patterns to identify and block suspicious activities in real time.
   * Custom Rules: Allows you to create custom rules to address specific bot threats tailored to your business needs.

3. Threat Intelligence:
   * Global Insights: Uses data from Cloudflare's extensive network to stay ahead of evolving bot threats.
   * Updated Databases: Regularly updates bot databases to include new and emerging bot patterns, ensuring up-to-date protection.

4. Analytics and Reporting:
   * Detailed Insights: Provides comprehensive analytics and reports on bot traffic, helping you understand the impact and adjust defenses accordingly.
   * User-friendly Dashboard: Easy-to-navigate dashboard where you can monitor bot activity and manage your settings.

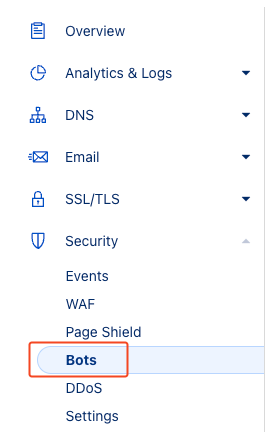

**How to Implement Cloudflare Bot Solutions**

* Sign Up and Add Your Site:

Create an account on Cloudflare and add your domain. Follow the prompts to configure your DNS settings.

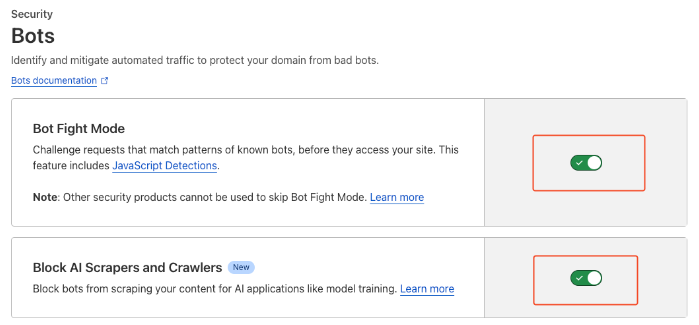

* Enable Bot Fight Mode: Navigate to the Firewall section in the Cloudflare dashboard and toggle on Bot Fight Mode. This feature will start protecting your site immediately.

Activate Bot Fight Mode and Block AI Scrapers and Crawlers if not enabled already

* Configure Bot Management:

Access the Bot Management section to set up advanced rules and customize your bot protection strategy. Use the provided analytics to monitor bot activity and adjust settings as needed.

* Monitor and Adjust:

Regularly check the Cloudflare dashboard for updates on bot traffic and make necessary adjustments to your bot management rules to keep your site protected against new threats.

The dashboard gives information about threats and bots identified by Cloudflare

**Bot detection using AWS WAF**

AWS WAF is a web application firewall that helps protect your web applications from common web exploits that could affect application availability, compromise security, or consume excessive resources. With AWS WAF, you can create rules to filter web traffic based on various conditions, including IP addresses, HTTP headers, URI strings, and the origin of the requests.

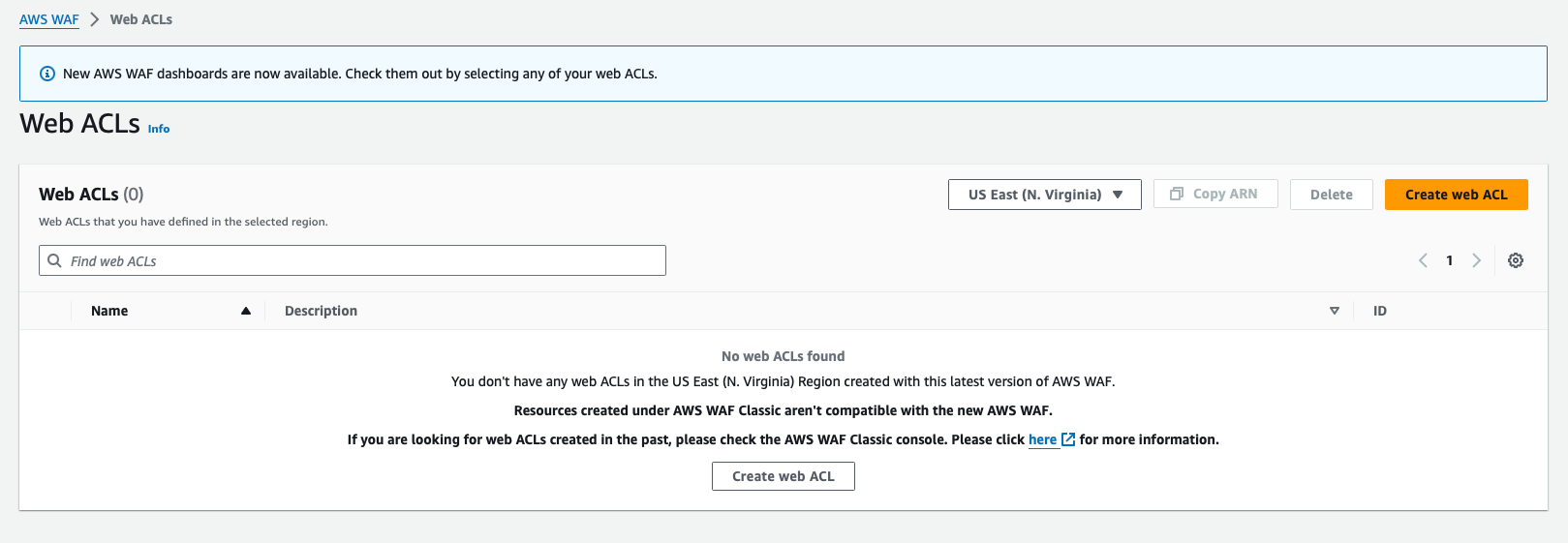

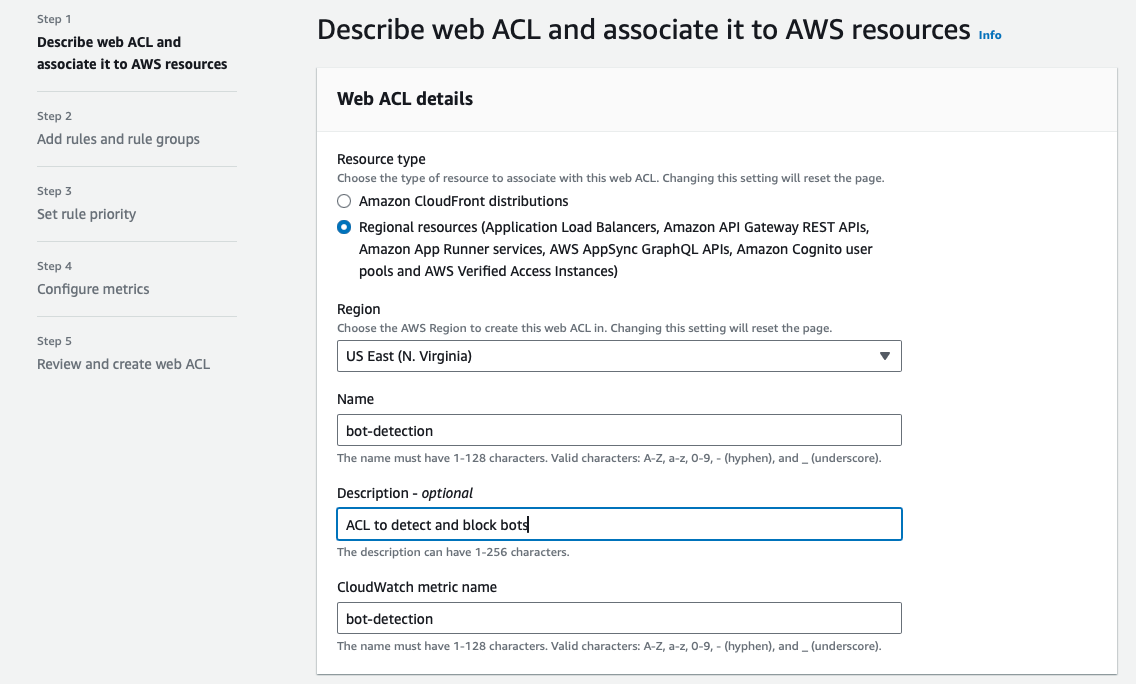

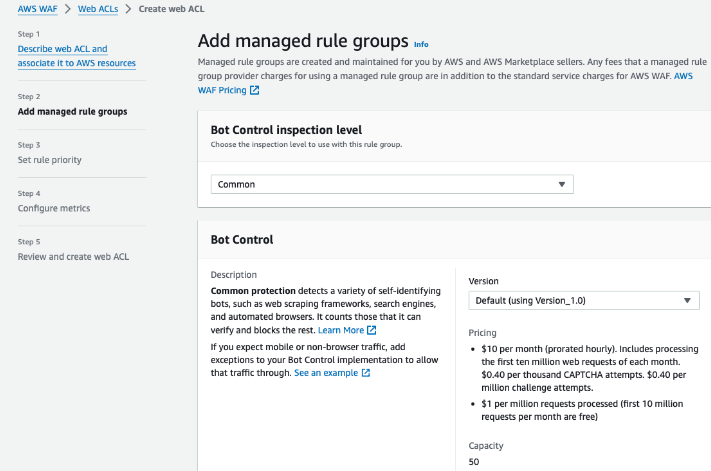

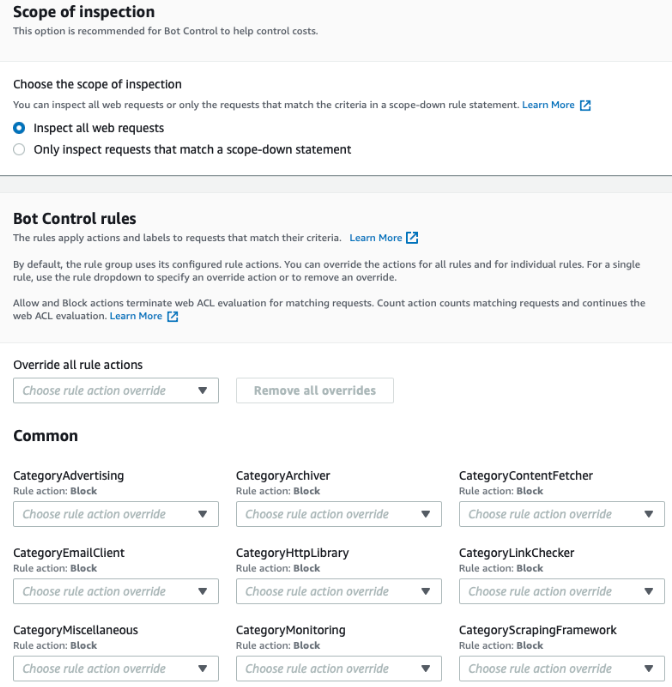

To begin using AWS WAF, follow these steps:

1. Create a Web ACL (Access Control List):

Navigate to the AWS WAF & Shield console.

Create a new Web ACL and associate it with your CloudFront distribution, API Gateway, or ALB (Application Load Balancer).

Add AWS resources that need to be monitored

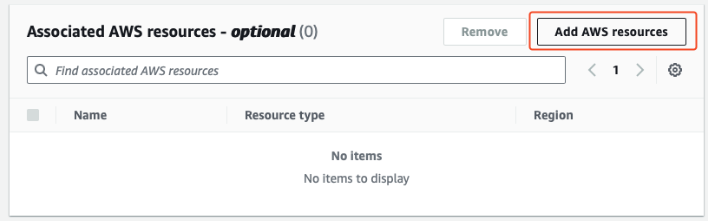

2. Add Rules to Detect Bots:

* User-Agent Filtering: Add a custom rule to inspect the User-Agent header. You can block requests with known bot User-Agent strings or allow only specific User-Agent strings.

* Rate-Based Rules: Use rate-based rules to limit the number of requests from a single IP address. This can help mitigate bots that generate high volumes of traffic.

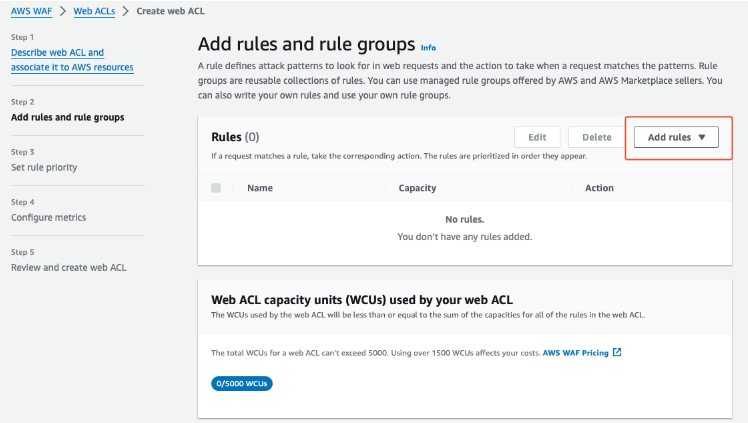

* AWS Managed Rules: Utilize AWS Managed Rules for bots and scraping detection. AWS offers a Bot Control rule group that specifically targets known bot traffic.

You can choose AWS Managed Rules to begin with and configure to block bots depending on configurations.

* Click on “Add to web ACL” and edit to add required configurations

* You can choose multiple categories based on your requirements to block the bots

* Once the rules are added, monitoring can be done using the bot control dashboard
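The same rules can also be defined in code. This sketch builds three AWS WAFv2 rule definitions as plain Python dictionaries: a rate-based rule, a User-Agent match, and the AWS Bot Control managed rule group. The dictionaries use the shape that boto3's `wafv2` client accepts in the `Rules` argument of `create_web_acl` and `update_web_acl`. The rule names, priorities, the 1,000-request limit, and the `gptbot` match string are illustrative assumptions. Check every field against the AWS WAFv2 API reference before deploying.

```python
def visibility(metric_name: str) -> dict:
    """Sampling/CloudWatch settings that every WAFv2 rule requires."""
    return {
        "SampledRequestsEnabled": True,
        "CloudWatchMetricsEnabled": True,
        "MetricName": metric_name,
    }


def bot_rules(rate_limit: int = 1000, blocked_agent: str = "gptbot") -> list[dict]:
    """Hypothetical rule set: rate limit, User-Agent block, AWS Bot Control."""
    return [
        {   # Block any single IP that exceeds `rate_limit` requests in the evaluation window.
            "Name": "rate-limit-per-ip",
            "Priority": 0,
            "Statement": {"RateBasedStatement": {"Limit": rate_limit, "AggregateKeyType": "IP"}},
            "Action": {"Block": {}},
            "VisibilityConfig": visibility("rateLimitPerIp"),
        },
        {   # Block requests whose lowercased User-Agent header contains `blocked_agent`.
            "Name": "block-user-agent",
            "Priority": 1,
            "Statement": {"ByteMatchStatement": {
                "SearchString": blocked_agent.lower().encode(),
                "FieldToMatch": {"SingleHeader": {"Name": "user-agent"}},
                "TextTransformations": [{"Priority": 0, "Type": "LOWERCASE"}],
                "PositionalConstraint": "CONTAINS",
            }},
            "Action": {"Block": {}},
            "VisibilityConfig": visibility("blockUserAgent"),
        },
        {   # AWS Bot Control managed rule group at the common inspection level.
            "Name": "aws-bot-control",
            "Priority": 2,
            "Statement": {"ManagedRuleGroupStatement": {
                "VendorName": "AWS",
                "Name": "AWSManagedRulesBotControlRuleSet",
                "ManagedRuleGroupConfigs": [
                    {"AWSManagedRulesBotControlRuleSet": {"InspectionLevel": "COMMON"}},
                ],
            }},
            # Managed rule groups take OverrideAction instead of Action.
            "OverrideAction": {"None": {}},
            "VisibilityConfig": visibility("awsBotControl"),
        },
    ]


if __name__ == "__main__":
    for rule in bot_rules():
        print(rule["Priority"], rule["Name"])
```

You could then pass `Rules=bot_rules()` to `boto3.client("wafv2").create_web_acl(...)` together with `Name`, `Scope`, `DefaultAction={"Allow": {}}`, and a Web ACL-level `VisibilityConfig`. Use `Scope="REGIONAL"` for API Gateway or an ALB, and `Scope="CLOUDFRONT"` (in `us-east-1`) for CloudFront distributions.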

**Boilerplate for robots.txt**

The example below blocks currently known AI data scrapers and undocumented AI agents. Use it as a starting point and customize it as needed.

```
User-agent: Applebot-Extended
Disallow: /

User-agent: Bytespider
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: Diffbot
Disallow: /

User-agent: FacebookBot
Disallow: /

User-agent: Google-Extended
Disallow: /

User-agent: GPTBot
Disallow: /

User-agent: Meta-ExternalAgent
Disallow: /

User-agent: omgili
Disallow: /

User-agent: Timpibot
Disallow: /

User-agent: anthropic-ai
Disallow: /

User-agent: Claude-Web
Disallow: /

User-agent: cohere-ai
Disallow: /
```

You can validate robots.txt using [this](https://technicalseo.com/tools/robots-txt/) online service.
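You can also check the rules locally with Python's standard `urllib.robotparser`. This sketch parses an excerpt of the boilerplate above and confirms that the listed AI crawlers are disallowed, while other crawlers such as Googlebot remain allowed. Two caveats apply. First, this parser matches on the crawler's product token (for example `GPTBot`), not on a full browser-style User-Agent string. Second, it treats a blank line as the end of a record, so each `User-agent` line must sit directly above its `Disallow` line.

```python
from urllib.robotparser import RobotFileParser

# Excerpt of the boilerplate above: each record is a User-agent line
# immediately followed by its Disallow line; records are separated by a blank line.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: Google-Extended
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for agent in ("GPTBot", "ClaudeBot", "Googlebot"):
        print(agent, "allowed" if rp.can_fetch(agent, "/articles/1") else "blocked")
    # GPTBot blocked / ClaudeBot blocked / Googlebot allowed
```

Blocking `Google-Extended` does not block `Googlebot`. The two are separate tokens, so regular search indexing is unaffected.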

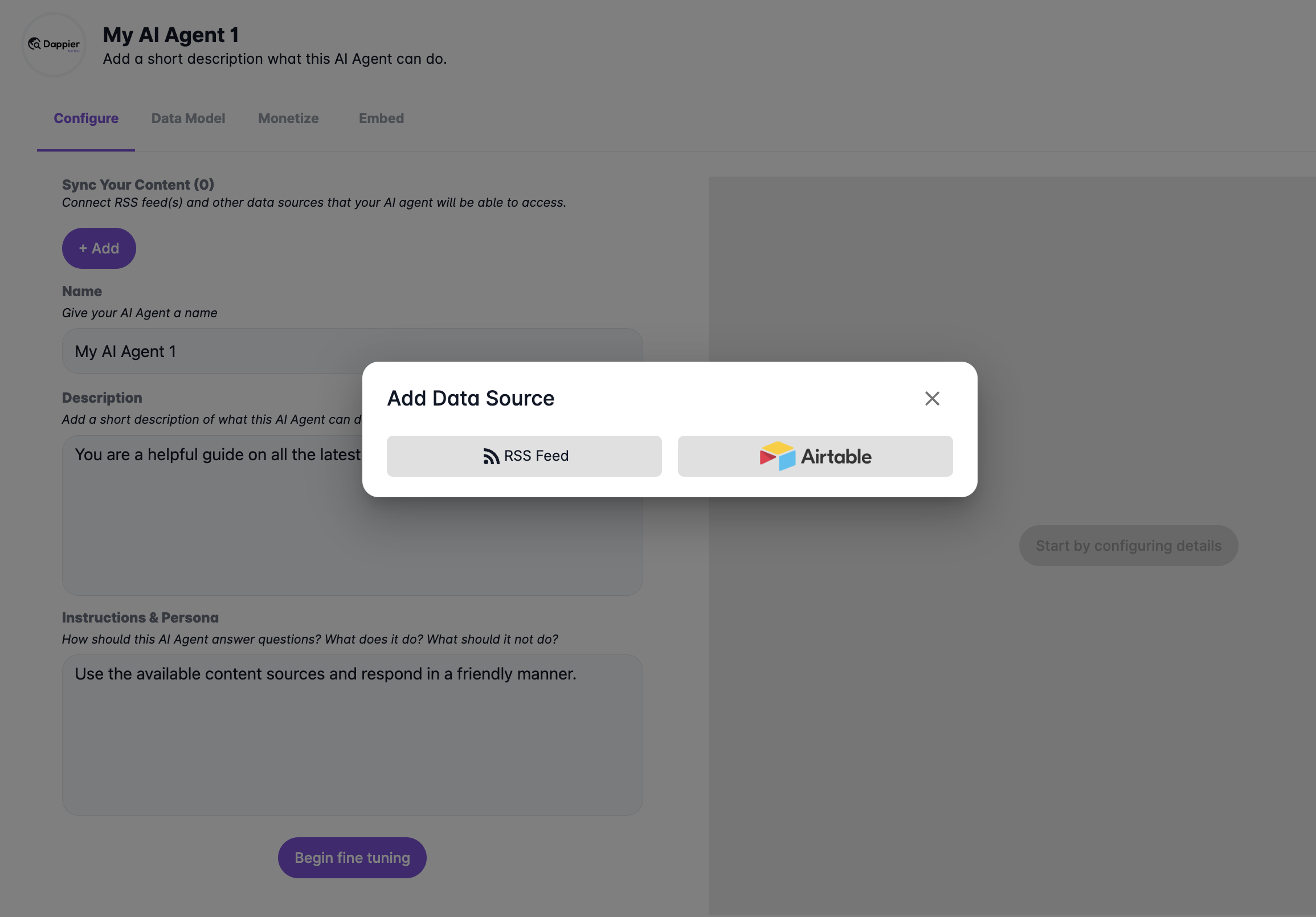

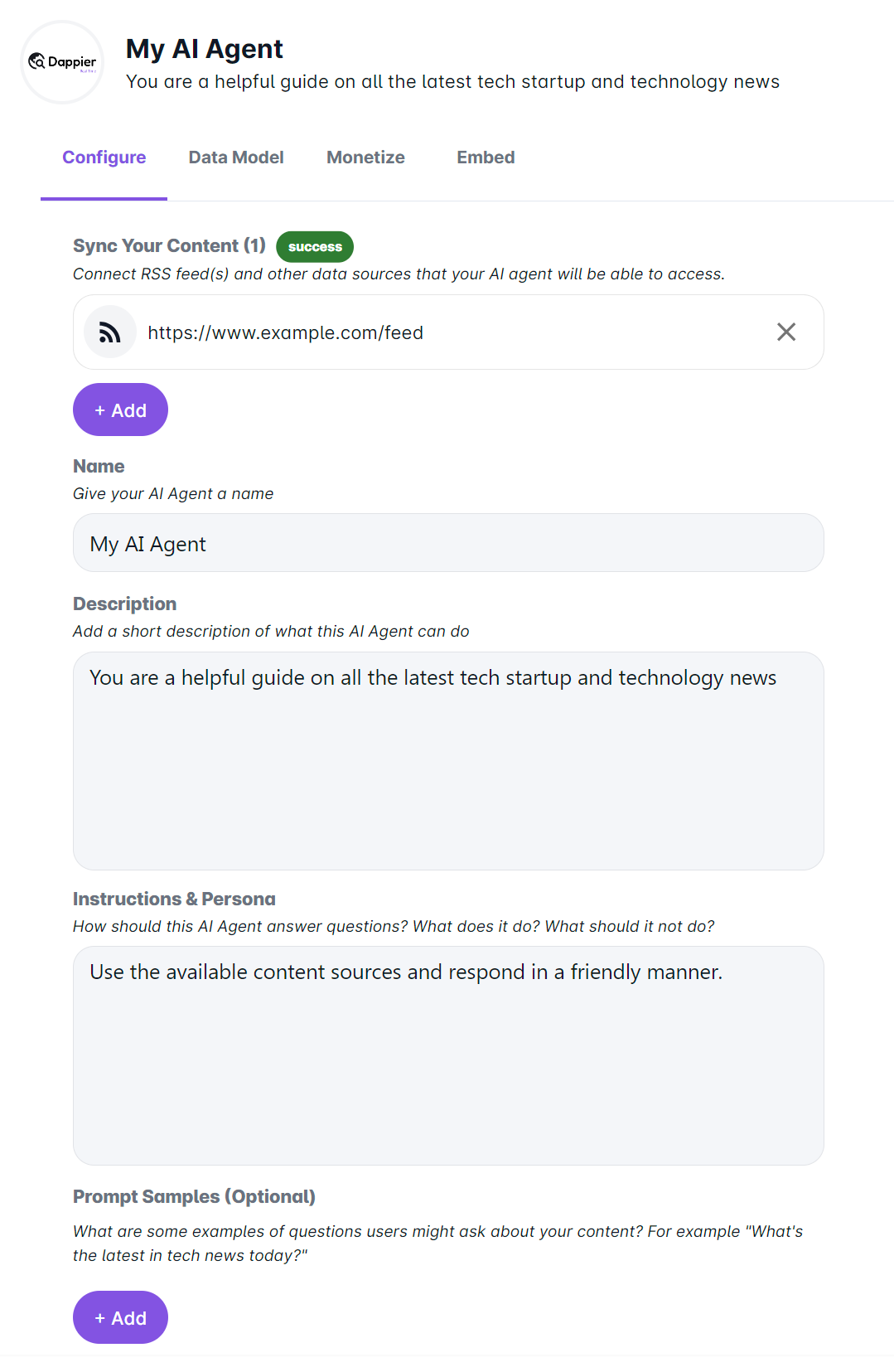

# Configure Your AI Agent

Source: https://docs.dappier.com/configure-ai-agent

Welcome to Dappier's Create AI Agent tool! With this feature, you can configure your AI Agent to suit your needs. To begin, navigate to [My AI Agents](https://platform.dappier.com/my-ai) and click on **Create AI Agent**.

With just a few steps, you can fine-tune your AI Agent. Here’s how:

### Sync Your Content

Start building your AI agent from knowledge sources such as RSS feeds or Airtable.

`, ` `, ``)

- Apply all styles via **inline CSS only**

- Ensure all links are clickable (``)

- Keep it responsive and compatible with major email clients (no JavaScript, no external assets)

- Don't include ```html in the output.

All the related information is below:

{{company_overview_response}}

{{financial_performance_response}}

{{peer_comparison_response}}

{{stock_snapshot_response}}

{{news_response}}

````

This action will generate a clean, richly structured report in semantic HTML format—ready to be delivered by email.

## 📤 Delivering the Report via Gmail

Now that the investment report is generated, let’s send it back to the original sender as a formatted HTML email using Gmail.

### Step 11: Send the Email

Add a **Gmail** action and choose `Send Email`.

Configure the email fields as follows:

* **To**:

  ```text
  {{email_sender_address}}
  ```

  Use the dynamic reference to send it back to the email originator:

  ```text
  {{trigger['message']['from']['value'][0]['address']}}
  ```

* **Subject**:

  ```text
  Stock Market Analysis of {{extracted_ticker}}
  ```

* **Body**:

  ```text
  {{investment_report_html}}
  ```

* **Body Type**: `HTML`

* **CC/BCC/Reply-To**: Leave these empty unless needed.

* **Draft**: Set to `false` to send the email immediately.

Once configured, the flow will respond to every stock-related query with a professional-grade investment report, fully automated.
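Outside Activepieces, you can reproduce this step with Python's standard `email` package. This sketch assumes the trigger payload has the nested shape implied by the `trigger['message']['from']['value'][0]['address']` reference above. The function name and sample values are hypothetical. Sending the message (for example via `smtplib` or the Gmail API) is not shown.

```python
from email.message import EmailMessage


def build_report_email(trigger: dict, ticker: str, report_html: str, sender: str) -> EmailMessage:
    """Build the reply that carries the HTML investment report."""
    # Same path as the Activepieces dynamic reference for the original sender.
    recipient = trigger["message"]["from"]["value"][0]["address"]

    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = f"Stock Market Analysis of {ticker}"
    # Plain-text fallback first, then the HTML body as the preferred alternative.
    msg.set_content("This report is best viewed in an HTML-capable email client.")
    msg.add_alternative(report_html, subtype="html")
    return msg


if __name__ == "__main__":
    trigger = {"message": {"from": {"value": [{"address": "john.doe@example.com"}]}}}
    message = build_report_email(trigger, "NVDA", "<h1>NVDA report</h1>", "reports@example.com")
    print(message["To"], "|", message["Subject"], "|", message.get_content_type())
```

The plain-text part gives clients that cannot render HTML a readable fallback. HTML-capable clients show the `text/html` alternative.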

## 🌟 Highlights

This notebook has guided you through building a fully automated stock market analysis workflow using Activepieces, OpenAI, Dappier, and Gmail. You’ve seen how to classify queries, extract ticker symbols, fetch real-time financial data, generate detailed reports, and deliver them directly to email—all without writing a single line of backend code.

Key tools utilized in this notebook include:

* **Activepieces**: A powerful no-code automation platform that enables app-triggered workflows with conditional logic, AI actions, and app integrations.

* **OpenAI**: A leading provider of advanced AI models used here to classify stock queries, extract ticker symbols, and generate investment reports in rich HTML format.

* **Dappier**: A platform connecting LLMs and automation tools to real-time, rights-cleared data from trusted sources, specializing in domains like stock market, finance, and news. It delivers enriched, prompt-ready data, empowering automations with verified and up-to-date information.

* **Gmail**: Serves as both the input trigger and output channel, making the workflow seamlessly email-driven.

This comprehensive setup allows you to adapt and expand the example for various scenarios requiring stock research, financial insights, or real-time data–driven automation.

# ⚙️ Build Smarter Agent.ai Agents with Dappier’s Real-Time, Verified Data Models

Source: https://docs.dappier.com/cookbook/recipes/agent-ai-create-dappier-agent

[**Agent.ai**](http://Agent.ai) is a professional network and marketplace for AI agents, and the people who love them. It allows users to discover, connect with, and hire a variety of AI agents to perform useful tasks, serving as a hub for agent-based collaborations and innovations.

[**Dappier**](https://dappier.com/developers/) is a platform that connects LLMs and Agentic AI agents to real-time, rights-cleared data from trusted sources, including web search, finance, and news. By providing enriched, prompt-ready data, Dappier empowers AI with verified and up-to-date information for a wide range of applications.

This guide provides a step-by-step process for extracting real-time search data from Dappier RAG models into a new Agent on the Agent.ai platform. Follow the instructions carefully to ensure a successful setup.

For the purpose of this guide, we are using Dappier’s Real Time Data RAG model, available here: [https://marketplace.dappier.com/marketplace/real-time-search](https://marketplace.dappier.com/marketplace/real-time-search)

## Watch the Video Guide

If you prefer a visual walkthrough, check out the accompanying video guide below:

VIDEO

## Follow the Step-by-Step Tutorial

For detailed, written instructions, follow along with this comprehensive guide to complete your setup:

### Accessing the Agent.ai Platform

* Open your preferred web browser and navigate to [agent.ai](http://agent.ai).

* Log in using your credentials. If you don’t have an account, click **Sign Up** and complete the registration process.


***

## Key Features

* **Weather-Optimized Itineraries:** Get personalized travel plans tailored to the real-time weather conditions of your destination.

* **Local News Updates:** Stay informed with today's local news and updates for your travel location.

* **Dynamic Scheduling:** Receive a detailed day-by-day schedule with activities, timings, and weather forecasts.

* **User-Friendly Interaction:** Simple prompts guide you through the planning process, making it easy to create your ideal trip.

***

## How to Use the Dynamic Travel Planner

Using the Dynamic Travel Planner is simple and intuitive. Follow the steps below to create your personalized travel itinerary:

1. **Start the Planner:** Initiate the Dynamic Travel Planner by providing your travel destination.

2. **Provide Trip Details:**
   * Specify your travel start date.
   * Indicate the duration of your trip (in days).

3. **Receive Your Itinerary:** The planner will generate a detailed, weather-optimized itinerary for your trip, including:
   * A day-by-day schedule with activities and timings.
   * Real-time weather forecasts for your destination.
   * Today's local news updates to keep you informed.

***

## SmartFlow Actions

This agent is designed to provide users with a dynamic and personalized travel itinerary based on real-time data. Below are the SmartFlow actions configured for this agent:

### **1. Collect Destination Input**

* **Action Type:** Get user input (text)

* **Prompt:** "Where would you like to go?"

* **Variable:** `destination_city`

* **Functionality:** This step collects the user's desired travel destination and stores it in the variable `destination_city`.

### **2. Fetch Local News for Destination**

* **Action Type:** Make REST API call

* **Endpoint:** `https://api.dappier.com/app/aimodel/am_01j06ytn18ejftedz6dyhz2b15`

* **Save Response To:** `local_news`

* **Functionality:** This step queries the Dappier API to retrieve real-time local news for the destination specified by the user. The response is saved in `local_news` for further use.

### **3. Collect Trip Start Date**

* **Action Type:** Get user input (text)

* **Prompt:** "When would you like to leave for `{{destination_city}}`?"

* **Variable:** `trip_start_date`

* **Functionality:** The agent prompts the user to specify their preferred departure date for the trip, storing it in the variable `trip_start_date`.

### **4. Collect Trip Duration**

* **Action Type:** Get user input (number)

* **Prompt:** "How long will this trip be? (in number of days)"

* **Variable:** `trip_duration`

* **Functionality:** The agent asks the user to enter the trip duration in days, which is then stored in `trip_duration`.

### **5. Fetch Real-Time Weather Forecast**

* **Action Type:** Make REST API call

* **Endpoint:** `https://api.dappier.com/app/aimodel/am_01j06ytn18ejftedz6dyhz2b15`

* **Save Response To:** `weather_forecast`

* **Functionality:** This step queries the Dappier API to retrieve a real-time weather forecast for the specified destination city. The weather forecast data is stored in `weather_forecast` for itinerary customization.

### **6. Generate Travel Itinerary with AI**

* **Action Type:** Invoke `gpt-4o-mini`

* **Prompt:**

```

You are a helpful dynamic travel planning assistant. Follow these steps:

**Display Local News:**

Format and display today's local news for the `{{destination_city}}`:

`{{local_news}}`

**Design the Itinerary:**

Generate a `{{trip_duration}}`-day travel itinerary for `{{destination_city}}`, tailored to the real-time weather forecast starting from `{{trip_start_date}}`. Use the below weather forecast information to create the customized itinerary:

`{{weather_forecast}}`

**Output:**

Present local news along with a detailed itinerary including dates, timing, activities, and weather forecast for each day.

```

* **Save Response To:** `assistant_response`

* **Functionality:** The AI combines the user inputs (`destination_city`, `trip_start_date`, and `trip_duration`) with the fetched `local_news` and `weather_forecast` data to generate a travel itinerary. It incorporates real-time local news and weather conditions to offer a fully customized trip plan.
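Agent.ai fills the `{{variable}}` placeholders in the prompt with values saved by earlier steps. The substitution can be sketched in Python as below. This illustrates the idea; it is not Agent.ai's implementation. The regex, the `render_prompt` name, and the choice to leave unknown placeholders untouched are assumptions.

```python
import re

# Matches {{name}} with optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, variables: dict) -> str:
    """Replace each {{name}} with str(variables[name]); unknown names are left as-is."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        return str(variables[name]) if name in variables else match.group(0)

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    template = ("Generate a {{trip_duration}}-day travel itinerary for {{destination_city}}, "
                "starting from {{trip_start_date}}.")
    print(render_prompt(template, {
        "destination_city": "Paris",
        "trip_start_date": "Coming weekend",
        "trip_duration": 7,
    }))
    # Generate a 7-day travel itinerary for Paris, starting from Coming weekend.
```

Leaving unknown placeholders in place makes a missing variable easy to spot in the final prompt. Silently substituting an empty string would hide it.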

### **7. Display Final Output**

* **Action Type:** Output result

* **Variable:** `assistant_response`

* **Format:** Auto-format under the heading **Output**

* **Functionality:** The generated itinerary along with local news is presented to the user in a well-structured format.

## Example Prompts

Here are some example prompts to help you get started with the Dynamic Travel Planner:

* **Destination Prompt:**
  * "Where would you like to go?"
  * Example: "Paris"

* **Travel Dates Prompt:**
  * "When would you like to leave for \[destination]?"
  * Example: "Coming weekend"

* **Trip Duration Prompt:**
  * "How long will this trip be? (in number of days)"
  * Example: 7

***

## Sample Output

## Today's Local News from Paris

Here’s the latest buzz from Paris:

* **AI Summit:** Paris is hosting an AI Summit, and there’s chatter about Elon Musk’s \$97.4 billion bid complicating things for OpenAI. 🤖💰

* **Legal News:** A French court has sentenced a Tunisian national to life in prison for the Nice church attack. ⚖️

* **Cycle Infrastructure Debate:** The recent death of Paul Varry has sparked discussions about the expansion of cycling infrastructure in the city. 🚴‍♂️

* **Crime Report:** An American woman is suspected of killing her newborn baby in Paris, raising serious concerns. 😢

* **Political Tensions:** French PM is threatening to review the migration pact with Algeria, especially regarding deportations. 🇫🇷

Stay tuned for more updates! 📰✨

***

## 7-Day Travel Itinerary for Paris

**Weather Forecast Overview:** A mix of clouds and sun with mild temperatures, perfect for exploring the city! 🌤️✨

***

### **Day 1: Saturday, March 2**

* **Weather:** High of 48°F (9°C), Low of 37°F (3°C), Cloudy with a chance of light rain.

* **Morning:** Arrive in Paris, check into your hotel.

* **Afternoon:** Visit the Louvre Museum (2 PM - 5 PM). Enjoy the art while staying indoors.

* **Evening:** Dinner at a cozy bistro in the Le Marais district (7 PM).

***

### **Day 2: Sunday, March 3**

* **Weather:** High of 50°F (10°C), Low of 38°F (3°C), Mostly cloudy, slight chance of rain.

* **Morning:** Breakfast at a local café (9 AM).

* **Midday:** Explore Notre-Dame Cathedral and the Île de la Cité (11 AM - 1 PM).

* **Afternoon:** Stroll through Jardin du Luxembourg (2 PM - 4 PM).

* **Evening:** Enjoy a Seine River cruise (6 PM).

***

### **Day 3: Monday, March 4**

* **Weather:** High of 52°F (11°C), Low of 39°F (4°C), Partly sunny.

* **Morning:** Visit Montmartre and the Sacré-Cœur Basilica (10 AM - 12 PM).

* **Afternoon:** Lunch at a café in Montmartre (12:30 PM).

* **Midday:** Explore the Moulin Rouge area and take photos (2 PM - 3 PM).

* **Evening:** Dinner at a restaurant with a view of the Eiffel Tower (7 PM).

***

### **Day 4: Tuesday, March 5**

* **Weather:** High of 53°F (12°C), Low of 40°F (5°C), Mostly sunny.

* **Morning:** Visit the Musée d'Orsay (10 AM - 12 PM).

* **Afternoon:** Lunch in the Saint-Germain-des-Prés area (12:30 PM).

* **Midday:** Walk along the Seine and visit Pont Alexandre III (2 PM - 3 PM).

* **Evening:** Attend a show at the Opéra Garnier (7 PM).

***

### **Day 5: Wednesday, March 6**

* **Weather:** High of 54°F (12°C), Low of 41°F (5°C), Partly cloudy.

* **Morning:** Explore the Champs-Élysées and visit the Arc de Triomphe (10 AM - 12 PM).

* **Afternoon:** Lunch at a café on the Champs-Élysées (12:30 PM).

* **Midday:** Visit the Palace of Versailles (2 PM - 5 PM).

* **Evening:** Return to Paris for dinner in the Latin Quarter (7 PM).

***

### **Day 6: Thursday, March 7**

* **Weather:** High of 55°F (13°C), Low of 42°F (6°C), Mostly sunny.

* **Morning:** Visit the Catacombs of Paris (10 AM - 12 PM).

* **Afternoon:** Lunch in the Montparnasse area (12:30 PM).

* **Midday:** Explore the Luxembourg Gardens (2 PM - 4 PM).

* **Evening:** Dinner at a rooftop restaurant with views of the city (7 PM).

***

### **Day 7: Friday, March 8**

* **Weather:** High of 56°F (13°C), Low of 43°F (6°C), Partly sunny.

* **Morning:** Last-minute shopping in the Le Marais district (10 AM - 12 PM).

* **Afternoon:** Lunch at a local bakery (12:30 PM).

* **Midday:** Visit the Centre Pompidou (2 PM - 4 PM).

* **Evening:** Farewell dinner at a classic French restaurant (7 PM).

***

Enjoy your trip to Paris! 🌍✈️

## Why Choose the Dynamic Travel Planner?

* **Real-Time Data:** Access up-to-date weather forecasts and today's local news for your destination.

* **Personalized Plans:** Get itineraries tailored to your preferences, travel dates, and real-time weather conditions.

* **Seamless Experience:** Simplify your travel planning with an intuitive and interactive assistant.

***

## Get Started Today

Ready to plan your next trip? Use the **Dynamic Travel Planner** to create a personalized, weather-optimized itinerary and stay informed with real-time updates for your destination. Start your journey now and experience travel planning like never before!

Happy travels! 🚀✈️

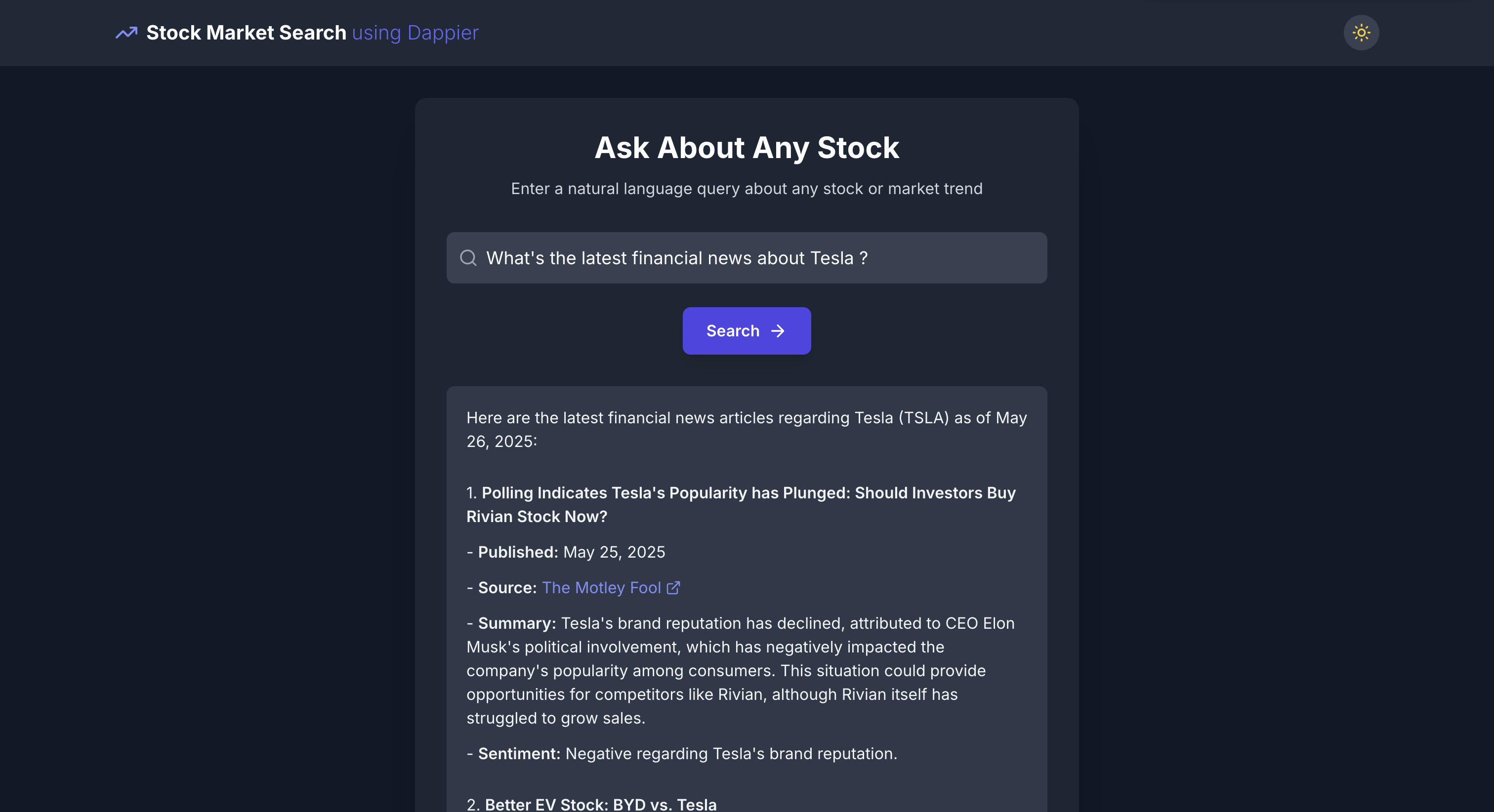

# ⚙️ Real Time Stock Market Analysis | Powered by Dappier and Agent.ai

Source: https://docs.dappier.com/cookbook/recipes/agent-ai-real-time-stock-market-analysis


[**Agent.ai**](http://Agent.ai) is a professional network and marketplace for AI agents, and the people who love them. It allows users to discover, connect with, and hire a variety of AI agents to perform useful tasks, serving as a hub for agent-based collaborations and innovations.

[**Dappier**](https://dappier.com/developers/) is a platform that connects LLMs and Agentic AI agents to real-time, rights-cleared data from trusted sources, including web search, finance, and news. By providing enriched, prompt-ready data, Dappier empowers AI with verified and up-to-date information for a wide range of applications.

## Overview

The **Real Time Stock Analysis** is a custom AI-powered investment assistant built using Dappier's **Real Time Data** model. It is designed to create **data-driven trading strategies** and deliver **real-time financial news updates** for your chosen sector. Whether you're a seasoned investor or just starting out, this assistant helps you make informed investment decisions by providing **customized plans** with stock recommendations, trend analysis, and market insights.

## Watch the Video Guide

If you prefer a visual walkthrough, check out the accompanying video guide below:

VIDEO

***

## Key Features

* **Data-Driven Strategies:** Get personalized trading strategies tailored to the latest market trends and financial news.

* **Real-Time Financial News:** Stay updated with the latest financial news and insights for your chosen sector.

* **Customized Plans:** Receive detailed stock recommendations, trend analysis, and market insights.

* **Email Delivery:** Get your trading strategy delivered directly to your inbox for easy access and reference.

***

## How to Use the Real Time Stock Analysis

Using the Real Time Stock Analysis is simple and intuitive. Follow the steps below to create your personalized investment plan:

1. **Start the Analysis:** Initiate the Real Time Stock Analysis by providing the sector you're interested in.

2. **Provide Your Email:**
   * Enter your email address to receive the detailed trading strategy.

3. **Receive Your Plan:** The assistant will generate a **customized trading strategy** based on real-time financial news and market trends, and send it directly to your inbox.

***

## **SmartFlow Actions**

This agent is designed to provide users with a comprehensive stock market analysis, including financial news and market trends, to help them make informed investment decisions. Below are the SmartFlow actions configured for this agent:

### **1. Collect Sector Interest**

* **Action Type:** Get user input (text)

* **Prompt:** "Which sector are you interested in investing in?"

* **Variable:** `sector_name`

* **Functionality:** This step collects the user’s preferred investment sector and stores it in the variable `sector_name`.

### **2. Collect User Email Address**

* **Action Type:** Get user input (text)

* **Prompt:** "Please provide your email address to send a detailed analysis."

* **Variable:** `user_email`

* **Functionality:** The agent asks the user to enter their email address, which is stored in `user_email` for sending the final report.

### **3. Fetch Stock Market Trends**

* **Action Type:** Make REST API call

* **Endpoint:** `https://api.dappier.com/app/aimodel/am_01j06ytn18ejftedz6dyhz2b15`

* **Save Response To:** `stock_market_trends`

* **Functionality:** This step queries the Dappier API to retrieve real-time stock market trends, which are stored in `stock_market_trends` for further analysis.

### **4. Fetch Financial News**

* **Action Type:** Make REST API call

* **Endpoint:** `https://api.dappier.com/app/aimodel/am_01j06ytn18ejftedz6dyhz2b15`

* **Save Response To:** `financial_news`

* **Functionality:** This step queries the Dappier API to fetch the latest financial news, which is stored in `financial_news` for detailed stock analysis.

### **5. Generate Stock Analysis and Trading Strategy**

* **Action Type:** Invoke `o3-mini`

* **Prompt:**

```

You are an expert stock trader analyst who creates a detailed trading strategy and provides the top stocks to invest in based on financial news and market trends.

**Financial News:**

`{{financial_news}}`

**Stock Market Trends:**

`{{stock_market_trends}}`

**Output:**

The output should be formatted as HTML for emails.

```

* **Save Response To:** `assistant_response`

* **Functionality:** The AI processes real-time financial news and stock market trends to generate a structured trading strategy, including top stock recommendations. The response is formatted as an HTML email for easy readability.

### **6. Send Email with Stock Analysis**

* **Action Type:** Send Email

* **Recipient:** `user_email`

* **Email Content:** `assistant_response`

* **Functionality:** The formatted HTML stock market analysis is sent to the user’s provided email address.

### **7. Display Confirmation Message**

* **Action Type:** Output result

* **Message:** "Please check your inbox for the detailed trading strategy."

* **Format:** Auto-format under the heading **Email sent to - `user_email`**

* **Functionality:** This step confirms to the user that the stock analysis report has been successfully sent to their email.
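The sketch below traces the data flow between the seven SmartFlow steps as a hypothetical Python pipeline. The `fetch`, `llm`, and `send_email` callables stand in for the Dappier REST call, the `o3-mini` invocation, and the email action. Their names and signatures, and the wording of the two Dappier queries, are assumptions. Stub callables let the flow run offline, as in the usage example.

```python
from typing import Callable

# Prompt from step 5, with {placeholders} for the two fetched variables.
PROMPT = (
    "You are an expert stock trader analyst who creates a detailed trading strategy and "
    "provides the top stocks to invest in based on financial news and market trends.\n\n"
    "**Financial News:**\n{financial_news}\n\n"
    "**Stock Market Trends:**\n{stock_market_trends}\n\n"
    "**Output:**\nThe output should be formatted as HTML for emails."
)


def run_stock_analysis(
    sector_name: str,                        # step 1 input
    user_email: str,                         # step 2 input
    fetch: Callable[[str], str],             # steps 3-4: Dappier real-time query (hypothetical)
    llm: Callable[[str], str],               # step 5: model invocation (hypothetical)
    send_email: Callable[[str, str], None],  # step 6: (recipient, html_body) (hypothetical)
) -> str:
    stock_market_trends = fetch(f"Latest stock market trends in the {sector_name} sector")
    financial_news = fetch(f"Latest financial news for the {sector_name} sector")
    assistant_response = llm(PROMPT.format(
        financial_news=financial_news, stock_market_trends=stock_market_trends))
    send_email(user_email, assistant_response)
    # Step 7: confirmation shown to the user.
    return f"Email sent to - {user_email}\nPlease check your inbox for the detailed trading strategy."


if __name__ == "__main__":
    outbox = []
    confirmation = run_stock_analysis(
        "Technology",
        "john.doe@example.com",
        fetch=lambda query: f"<data for: {query}>",
        llm=lambda prompt: "<html><body>Strategy</body></html>",
        send_email=lambda to, html: outbox.append((to, html)),
    )
    print(confirmation)
    print(outbox)
```

Passing the external services in as callables keeps the step ordering testable. The real Dappier, model, and email calls can be swapped in without changing the flow.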

## Example Prompts

Here are some example prompts to help you get started with the Real Time Stock Analysis:

* **Sector Prompt:**
  * "Which sector are you interested in investing in?"
  * Example: "Technology"

* **Email Prompt:**
  * "Please provide your email address to send a detailed analysis."
  * Example: "[john.doe@example.com](mailto:john.doe@example.com)"

***

## Sample Output

Once you provide the necessary details, the Real Time Stock Analysis will generate a trading strategy similar to the example below and send it to your email:

Email Sent to - [john.doe@example.com](mailto:john.doe@example.com)

**Subject:** Your Customized Trading Strategy for the Technology Sector

***

### **Tech Market Weekly Update**

**Date:** February 27, 2025

***

### **Market Overview & Trading Strategy**

The technology sector continues to outperform with significant momentum driven largely by advancements in artificial intelligence and robust earnings reports. The Morningstar US Technology Index has risen by **30.16%** over the past year, outperforming broader market gains of **23.92%**. This rapid growth, fueled by innovations from industry leaders, supports a continued bullish stance on tech stocks.

Our trading strategy for the coming period is to adopt a **diversified approach** within the tech sector with a focus on companies that are leading in AI, cloud, and other transformative technologies. Here’s a breakdown of the key aspects of our strategy:

* **Long-Term Investment:** Focus on holding core positions in market leaders with sustained growth in innovative technology.

* **Volatility Management:** Allocate a portion of the portfolio towards stocks that may experience short-term volatility (e.g., Tesla and Nvidia) but have strong fundamentals and growth potential.

* **Sector Diversification:** While tech remains a focus, continuously monitor market sentiment and diversify across sub-sectors like AI, cloud computing, and e-commerce within tech.

* **Risk Exposure:** Leverage earnings reports and product launch updates to adjust positions, keeping a keen eye on market trends and potential headwinds associated with high-growth stocks.

***

### **Top Tech Stocks to Watch**

#### **Nvidia (NVDA)**

* **Performance:** Up over **171%** on the year.

* **Strategy:** With its leadership in AI and gaming technology, Nvidia remains a top pick. Despite recent earnings volatility, its future product pipeline offers a strong growth narrative.

#### **Apple (AAPL)**

* **News:** Increasing investments in AI and machine learning alongside sustainability initiatives.

* **Strategy:** Apple’s reinforced commitment to innovation and massive market share (\~22% of the U.S. stock market) makes it ideal for long-term growth.

#### **Microsoft (MSFT)**

* **News:** Expanding AI integration into cloud services and Office products.

* **Strategy:** Consistent performance driven by cloud services and AI investments makes Microsoft a reliable cornerstone of any tech portfolio.

#### **Amazon (AMZN)**

* **News:** Robust growth from AWS and logistics expansion, alongside an enhanced AI-powered Alexa.

* **Strategy:** Amazon’s diversified revenue streams in e-commerce, cloud, and logistics provide a balanced growth opportunity.

#### **Meta Platforms (META)**

* **News:** Revitalizing user engagement and exploring new advertising and metaverse opportunities.

* **Strategy:** As Meta recovers and innovates within social media and digital advertising, it’s positioned for mid-to-long-term gains.

#### **Alphabet (GOOGL)**

* **News:** Dominance in online advertising along with aggressive AI initiatives.

* **Strategy:** Alphabet’s expansive ecosystem and service diversification continue to make it a compelling long-term buy.

#### **Tesla (TSLA)**

* **News:** Ongoing innovation in electric vehicles combined with energy solutions.

* **Strategy:** Tesla’s volatility offers trading opportunities for tactical positions, especially for investors with a higher risk tolerance.

***

### **Implementation & Next Steps**

Moving forward, consider the following action items:

1. **Monitor Earnings Reports:** Regularly review quarterly earnings to adjust exposure to issue-driven volatility.

2. **Stay Updated on Product Developments:** Focus on how tech giants are leveraging AI and innovative technologies to drive growth.

3. **Adjust Risk Management:** Balance high-growth opportunities with stable investments, ensuring a well-diversified portfolio.

4. **Sector & Market Trends:** Keep an eye on overall market sentiment and sector performance indices as indicators of broader economic conditions.

By aligning these strategies with recent financial news and robust stock market trends, investors can capitalize on both short-term opportunities and long-term growth potentials within the tech sector.

***

### **Disclaimer**

This email is for informational purposes only and should not be construed as financial advice. Always consult a professional advisor before making any investment decisions.

***

## Why Choose the Real Time Stock Analysis?

* **Real-Time Data:** Access up-to-date financial news and market trends for your chosen sector.

* **Personalized Plans:** Get trading strategies tailored to your investment goals and market conditions.

* **Seamless Experience:** Simplify your investment planning with an intuitive and interactive assistant.

***

## Get Started Today

Ready to optimize your investment strategy? Use the **Real Time Stock Analysis** to create a personalized, data-driven trading plan and stay informed with real-time financial updates for your chosen sector. Start your journey now and make smarter investment decisions!

For any questions or support, feel free to reach out to our team. Happy investing! 📈💼

# 🖇️ Dynamic Travel Planner with AgentStack, CrewAI & Dappier: Real-Time Itinerary Generation Using Multi-Agent AI

Source: https://docs.dappier.com/cookbook/recipes/agentstack-dynamic-travel-planner

This developer-focused cookbook demonstrates how to build and run a local multi-agent itinerary planner using **AgentStack**, **CrewAI**, and **Dappier**, powered by **OpenAI** and monitored via **AgentOps**. The setup guides you through generating structured travel itineraries based on real-time weather, live events, and hotel deals for a given city and date.

In this tutorial, you'll explore:

* **AgentStack**: A CLI-first framework for rapidly scaffolding AI agent workflows, generating agents, tasks, and tools with seamless CrewAI integration.

* **CrewAI**: A lightweight multi-agent orchestration engine, perfect for managing sequential or collaborative task execution between agents.

* **Dappier**: A platform that connects LLMs to real-time, rights-cleared data from trusted sources, specializing in domains like web search, finance, and travel. It delivers enriched, prompt-ready data, empowering AI with verified and up-to-date information for diverse applications.

* **OpenAI**: A leading provider of advanced AI models capable of natural language understanding, contextual reasoning, and content generation. It enables intelligent, human-like interactions and supports a wide range of applications across various domains.

* **AgentOps**: A monitoring tool that tracks and analyzes CrewAI agent runs, with full visibility into cost, token usage, execution traces, and replays.

This setup not only demonstrates a practical application of AI-driven travel planning but also provides a flexible framework that can be adapted to other real-world scenarios requiring real-time data integration from Dappier, multi-agent collaboration, and contextual reasoning.

## 📦 Project Initialization and Setup

To get started, we'll use the `agentstack` CLI to scaffold a fully functional multi-agent project. This command generates the base project structure, config files, virtual environment, and a ready-to-customize `crew.py` file with support for tools like Dappier and frameworks like CrewAI.

### Step 1: Initialize the Project

Run the following command in your terminal:

```bash

agentstack init dynamic_travel_planner

```

This will generate a folder named `dynamic_travel_planner` with the complete project structure.

### Step 2: Move Into the Project

```bash

cd dynamic_travel_planner

```

### Step 3: Activate the Virtual Environment

Activate the virtual environment created by `agentstack`:

```bash

source .venv/bin/activate

```

If you're using a Windows terminal, use:

```bash

.venv\Scripts\activate

```

> ✅ You now have a fully bootstrapped multi-agent AI project using AgentStack and CrewAI.

## 🔑 Setting Up API Keys

To enable real-time data access and AI reasoning, you’ll need API keys for the following services:

* **OpenAI** – for LLM-powered reasoning and summarization

* **Dappier** – for real-time web search (weather, live events, and hotel deals)

* **AgentOps** – for run monitoring and debugging

These keys are stored in the `.env` file created during project initialization.

### Step 1: Open the `.env` file

Inside your project root, open `.env` and update it with your API keys:

```env

# OpenAI

OPENAI_API_KEY=your_openai_key_here

# Dappier

DAPPIER_API_KEY=your_dappier_key_here

# AgentOps (Optional but recommended)

AGENTOPS_API_KEY=your_agentops_key_here

```

You can get your keys here:

* 🔑 Get your OpenAI API Key [here](https://platform.openai.com/account/api-keys)

* 🔑 Get your Dappier API Key [here](https://platform.dappier.com/profile/api-keys) — free credits available

* 🔑 Get your AgentOps API Key [here](https://app.agentops.ai/signin) - enables run tracking and replay

> 🧪 Make sure to keep these keys private. Do not commit `.env` to version control.
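Before you run the crew, you may want to confirm that the keys are actually set. The sketch below uses only the standard library. It parses simple `KEY=value` lines from `.env` and reports which required keys are missing or still hold the template placeholder. It does not handle quoting or `export` prefixes the way a full dotenv parser would. It treats `AGENTOPS_API_KEY` as optional, following the comment in the `.env` template above.

```python
from pathlib import Path

# AGENTOPS_API_KEY is optional, so it is not checked here.
REQUIRED_KEYS = ("OPENAI_API_KEY", "DAPPIER_API_KEY")


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=value lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_keys(env: dict[str, str]) -> list[str]:
    """Return required keys that are absent, empty, or still 'your_..._here' placeholders."""
    return [k for k in REQUIRED_KEYS if not env.get(k) or env[k].startswith("your_")]


if __name__ == "__main__":
    env_path = Path(".env")
    if env_path.exists():
        print("Missing keys:", missing_keys(parse_env(env_path.read_text())) or "none")
```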

## ⚙️ Installing Dependencies

After initializing the project and configuring your API keys, install the required dependencies to ensure everything runs smoothly.

The key packages used in this project are:

* [`crewai`](https://pypi.org/project/crewai/) – Multi-agent execution and orchestration

* [`agentstack`](https://pypi.org/project/agentstack/) – CLI toolchain for generating agents, tasks, and crews

* [`dappier`](https://pypi.org/project/dappier/) – Real-time data access layer for tools like stock search and news

* [`openai`](https://pypi.org/project/openai/) – LLM access to GPT-4o and other OpenAI models (automatically included)

### Step 1: Sync All Dependencies from `pyproject.toml`

If you're using the pre-generated project setup from `agentstack init`, run:

```bash

uv lock

uv sync

```

* `uv lock` will generate the `uv.lock` file based on your `pyproject.toml`

* `uv sync` will install all dependencies into the virtual environment

### Step 2: Add or Upgrade Individual Packages

If you need to upgrade packages manually, use:

```bash

uv remove "agentstack[crewai]"

uv add crewai

uv add agentstack

```

> ✅ You now have all the required dependencies installed locally and locked for reproducible agent execution.

## 👤 Creating Agents

Now that your environment is ready, let’s generate the agents that will power the travel planning workflow. These agents are created using the AgentStack CLI and are defined declaratively in `agents.yaml`.

### Step 1: Generate the Agents

You’ll use the following command to scaffold each agent:

```bash

agentstack generate agent

```

You’ll be prompted to enter the agent's name, role, goal, backstory, and model. Repeat this step for each agent in your system.

Here are the agents created for this project:

#### 🗺️ `travel_planner`

```bash

agentstack g a travel_planner \
  --role="A real-time travel assistant that collects weather data, events, and hotel information using the Dappier real-time web search tool." \
  --goal="To generate personalized multi-day itineraries based on current weather, events, and travel deals." \
  --backstory="Trained to support personalized trip planning, this agent uses Dappier's real-time search to retrieve local weather, events, and hotel offers for a target city and date. It creates structured itineraries tailored to weather conditions and event availability." \
  --llm=openai/gpt-4o

```

#### 📋 `itinerary_reporter`

```bash

agentstack g a itinerary_reporter \
  --role="A travel itinerary formatter that compiles data into a complete multi-day markdown itinerary." \
  --goal="To generate a markdown-formatted travel itinerary based on outputs from the travel planner." \
  --backstory="This agent specializes in transforming weather and event-based trip data into user-friendly markdown plans. It includes timing, local tips, daily highlights, and weather context for each activity." \
  --llm=openai/gpt-4o

```
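The multi-line commands above rely on trailing backslashes. A stray blank line or trailing space after a `\` splits them into separate shell commands. One way to avoid shell quoting altogether is to build the argument list in Python and pass it to `subprocess.run`. This is a minimal sketch that uses only the `agentstack g a` flags shown above (`--role`, `--goal`, `--backstory`, `--llm`). The `agent_argv` and `generate_agents` helpers are hypothetical. The sketch assumes `agentstack` is on your `PATH` inside the activated virtual environment. It defaults to a dry run that only prints each command.

```python
import shlex
import subprocess


def agent_argv(name: str, role: str, goal: str, backstory: str,
               llm: str = "openai/gpt-4o") -> list[str]:
    """Build the argument list for `agentstack g a` without any shell quoting."""
    return [
        "agentstack", "g", "a", name,
        f"--role={role}",
        f"--goal={goal}",
        f"--backstory={backstory}",
        f"--llm={llm}",
    ]


# Add travel_planner the same way.
AGENTS = {
    "itinerary_reporter": {
        "role": "A travel itinerary formatter that compiles data into a complete multi-day markdown itinerary.",
        "goal": "To generate a markdown-formatted travel itinerary based on outputs from the travel planner.",
        "backstory": ("This agent specializes in transforming weather and event-based trip data into "
                      "user-friendly markdown plans. It includes timing, local tips, daily highlights, "
                      "and weather context for each activity."),
    },
}


def generate_agents(agents: dict[str, dict[str, str]], dry_run: bool = True) -> list[list[str]]:
    """Print (dry run) or execute one `agentstack g a` command per agent."""
    commands = [agent_argv(name, **fields) for name, fields in agents.items()]
    for argv in commands:
        if dry_run:
            print(shlex.join(argv))  # a correctly quoted, single-line command
        else:
            subprocess.run(argv, check=True)  # raises if agentstack exits non-zero
    return commands


if __name__ == "__main__":
    generate_agents(AGENTS)  # pass dry_run=False to actually run agentstack
```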

## ✅ Creating Tasks

Each task defines a specific responsibility to be performed by one of the agents. In this project, tasks are tightly scoped and executed sequentially by CrewAI, allowing agents to collaborate and generate a complete travel itinerary using real-time data.

Tasks are defined declaratively in `tasks.yaml` and are created using the AgentStack CLI.

### Step 1: Generate Tasks Using the CLI

Run the following command to create a new task:

```bash

agentstack generate task

```

You’ll be prompted to provide:

* `task_name` (required)

* `--description` – a detailed instruction including `{city}`, `{travel_date}`, `{num_days}`, and `{timestamp}`

* `--expected_output` – structured data, tables, or markdown expected from the task

* `--agent` – the agent responsible for this task

Here are the tasks used in this project:

#### 📅 `determine_travel_period`

```bash

agentstack g t determine_travel_period \
  --description="As of {timestamp}, use real-time web search to determine the current date and calculate the travel period based on the user's selected travel date: {travel_date} and trip length: {num_days} days in {city}." \
  --expected_output="A structured summary showing current date, travel start and end dates for the selected trip." \
  --agent=travel_planner

```
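The `determine_travel_period` task asks the agent to work out start and end dates, and the arithmetic is easy to check by hand. This sketch assumes the start day counts as day 1. Under that assumption, a 5-day trip that starts on May 2 ends on May 6, which matches the sample itinerary later in this guide. The sketch only understands `today`, `tomorrow`, or an ISO date. The `travel_period` helper is hypothetical and is not part of AgentStack or CrewAI.

```python
from datetime import date, timedelta


def travel_period(travel_date: str, num_days: int, today: date) -> tuple[date, date]:
    """Return inclusive (start, end) dates for a trip of num_days days."""
    if num_days < 1:
        raise ValueError("num_days must be at least 1")
    value = travel_date.strip().lower()
    if value == "today":
        start = today
    elif value == "tomorrow":
        start = today + timedelta(days=1)
    else:
        start = date.fromisoformat(value)  # e.g. "2025-05-02"
    # The start day counts as day 1, so the end is num_days - 1 days later.
    end = start + timedelta(days=num_days - 1)
    return start, end


if __name__ == "__main__":
    print(travel_period("tomorrow", 5, today=date(2025, 5, 1)))
```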

#### 🌤 `fetch_weather_forecast`

```bash

agentstack g t fetch_weather_forecast \
  --description="As of {timestamp}, use real-time web search to retrieve the multi-day weather forecast for {city} during the selected travel period starting on {travel_date} for {num_days} days." \
  --expected_output="Daily weather forecast for {city} including temperature, conditions, and any alerts during the trip." \
  --agent=travel_planner

```

#### 🎉 `fetch_live_events`

```bash

agentstack g t fetch_live_events \
  --description="As of {timestamp}, use real-time web search to find live events happening in {city} during the travel period starting on {travel_date} for {num_days} days. Include concerts, festivals, sports, and cultural events." \
  --expected_output="A list of events with names, dates, descriptions, and links where applicable for each day of the trip." \
  --agent=travel_planner

```

#### 🏨 `fetch_hotel_deals`

```bash

agentstack g t fetch_hotel_deals \
  --description="As of {timestamp}, use real-time web search to find hotel deals in {city} during the travel period starting on {travel_date} for {num_days} days. Include price estimates and booking links." \
  --expected_output="Top hotel deals with prices, availability, and booking URLs for the selected city and dates." \
  --agent=travel_planner

```

#### 📆 `generate_travel_itinerary`

```bash

agentstack g t generate_travel_itinerary \
  --description="As of {timestamp}, compile a detailed {num_days}-day travel itinerary for {city} by combining the outputs of all previous tasks: travel period, weather forecast, live events, and hotel deals. Include day-wise plans with weather-based suggestions, timing, activities, event highlights, hotel info, and booking links." \
  --expected_output="A markdown-formatted itinerary for {city} covering {num_days} days, including: daily schedule, activity suggestions, weather, events, hotel info, and travel tips." \
  --agent=itinerary_reporter

```

> ⚠️ The following two fields must be added manually to the `generate_travel_itinerary` task inside `tasks.yaml`, as they are not currently supported via the CLI:

```yaml

output_file: reports/{city}_travel_itinerary.md

create_directory: true

```

> 🪄 All of the above tasks will be executed in sequence using CrewAI when you run the crew.
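At run time, the crew's inputs fill the `{city}`, `{travel_date}`, `{num_days}`, and `{timestamp}` placeholders in these task strings and in `output_file`. The sketch below uses Python's `string.Formatter` to list the placeholders a template needs and then render it. That is a quick way to catch a misspelled placeholder before a long run. It approximates CrewAI's interpolation with `str.format`. Treat it as an assumption, not as CrewAI's own code.

```python
from string import Formatter


def placeholders(template: str) -> set[str]:
    """Names referenced as {name} in a template string."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def render(template: str, inputs: dict) -> str:
    """Fill placeholders, raising KeyError that lists any missing input names."""
    missing = placeholders(template) - set(inputs)
    if missing:
        raise KeyError(f"Missing inputs: {sorted(missing)}")
    return template.format(**inputs)


OUTPUT_FILE = "reports/{city}_travel_itinerary.md"
DESCRIPTION = ("As of {timestamp}, use real-time web search to retrieve the multi-day "
               "weather forecast for {city} during the selected travel period starting "
               "on {travel_date} for {num_days} days.")

if __name__ == "__main__":
    inputs = {"city": "paris", "travel_date": "tomorrow", "num_days": 5,
              "timestamp": "2025-05-01T09:00:00+00:00"}
    print(render(OUTPUT_FILE, inputs))
    print(render(DESCRIPTION, inputs))
```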

## 🛠️ Adding Tools to Agents

Tools enable agents to interact with external services like Dappier. In this project, Dappier provides real-time access to weather data, live events, and hotel deals, powering all the data-gathering tasks.

### Add Dappier Tools to the Project

Instead of manually assigning tools one by one, you can add the full Dappier toolkit using:

```bash

agentstack tools add dappier

```

This:

* Adds all Dappier tools to the AgentStack project

* Automatically installs the `dappier` package by updating your `pyproject.toml` file

* Registers the tools under `agentstack.tools["dappier"]` for in-code access

## 📝 Providing Inputs & Setting Timestamps

Before running the crew, you need to define the runtime inputs that agents and tasks will use. The AgentStack project already includes an `inputs.yaml` file, which is used to inject these inputs when the crew is executed.

In this project, we use four dynamic inputs:

* `city`: The destination city (e.g., "paris")

* `travel_date`: The planned start date of the trip (e.g., "tomorrow")

* `num_days`: The number of days for the trip (e.g., 5)

* `timestamp`: The current UTC time, injected automatically via code

### Step 1: Update `inputs.yaml`

Open the pre-generated `inputs.yaml` file and set the travel details:

```yaml

city: paris

travel_date: tomorrow

num_days: 5

```

You can modify the values to plan your own custom itinerary.

### Step 2: Inject a Real-Time Timestamp in `main.py`

To provide the current timestamp at execution time, update the `run()` function in `main.py`:

```python

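from datetime import datetime, timezone

import agentstack  # main.py already imports this; shown here for completeness


def run():
    """
    Run the agent.
    """
    # Load the values defined in inputs.yaml (city, travel_date, num_days).
    inputs = agentstack.get_inputs()
    # Add the current UTC time so every task shares the same {timestamp}.
    inputs["timestamp"] = datetime.now(timezone.utc).isoformat()
    # `instance` is the crew object created earlier in the generated main.py.
    instance.kickoff(inputs=inputs)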

```

This will:

* Dynamically inject the current UTC timestamp into the input dictionary

* Allow all tasks referencing `{timestamp}` in `tasks.yaml` to use consistent timing context

> ⏱️ Timestamped input ensures your travel itinerary is grounded in real-time context.
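Because `datetime.now()` returns a different value on every call, the injection step is easier to test when the clock is passed in. The helper below is a hypothetical refactor of the `run()` snippet above. It returns a new dictionary instead of mutating the one from `agentstack.get_inputs()`, and it produces the same ISO 8601 UTC string.

```python
from datetime import datetime, timezone
from typing import Optional


def with_timestamp(inputs: dict, now: Optional[datetime] = None) -> dict:
    """Return a copy of inputs with an ISO 8601 UTC 'timestamp' key added.

    `now` can be injected for tests; by default the current UTC time is used.
    A naive `now` is interpreted as local time by astimezone().
    """
    current = now if now is not None else datetime.now(timezone.utc)
    return {**inputs, "timestamp": current.astimezone(timezone.utc).isoformat()}


if __name__ == "__main__":
    print(with_timestamp({"city": "paris", "travel_date": "tomorrow", "num_days": 5}))
```

In `main.py`, `run()` could then call `instance.kickoff(inputs=with_timestamp(agentstack.get_inputs()))`.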

## 🚀 Running the Crew

Once your agents, tasks, tools, and inputs are all set up, you're ready to run the multi-agent crew. The crew will execute each task in sequence, collaborating to generate a fully structured itinerary using real-time data.

### Step 1: Run the AgentStack Project

To start the crew execution, run the following command from the project root:

```bash

agentstack run

```

This command:

* Loads your agents from `agents.yaml`

* Loads your tasks from `tasks.yaml`

* Injects inputs from `inputs.yaml`, plus the runtime `timestamp` added in `main.py`

* Executes all tasks sequentially via CrewAI

* Stores the final output (e.g., markdown itinerary) in the path defined in `tasks.yaml`

> ✅ You should see terminal output as each agent completes its assigned task.

### Step 2: Debug with `--debug` Mode (Optional)

For detailed execution traces, run with the debug flag:

```bash

agentstack run --debug

```

This enables verbose logging, including:

* Which agent is running

* Which tool is being used

* Real-time function call results

* Intermediate outputs for each task

> 🧪 Use debug mode to troubleshoot tool usage or model behavior during each step.

### Step 3: View the Final Output

After the crew finishes execution, you’ll find the generated itinerary at:

```

reports/paris_travel_itinerary.md

```

```markdown

# Paris 5-Day Travel Itinerary

**Travel Dates:** May 2, 2025 - May 6, 2025

**Hotel:** Choose from options like Hôtel Malte - Astotel, Hôtel Maison Mère, or others available with deals. Ensure to book through platforms like Trivago or Expedia for competitive prices and free cancellation offers.

---

## Day 1: May 2, 2025 - Arrival and Exploration

- **Weather:** Sunny, high around 83°F (28°C)

- **Morning:**
  - **Check-in to Hotel** - Settle into your chosen accommodation from the recommended list. Freshen up and prepare for a day of exploration in beautiful weather.
- **Afternoon:**
  - **St-Germain Jazz Festival** - Dive into the Parisian music scene with jazz performances across the city. Make sure to check [Event Link] for schedules and venues.
  - **Lunch at a Café** - Enjoy dining al fresco with delicious French cuisine nearby.
- **Evening:**
  - **Taste of Paris Festival** - Immerse yourself in a gastronomic paradise at the Grand Palais. [Event Link] offers details on participating chefs and menu highlights.
- **Local Tip:** Use public transport for convenient travel across the city; a Navigo card can be beneficial for your stay.

---

## Day 2: May 3, 2025 - Historical Charms and Entertainment

- **Weather:** Partly cloudy, high around 80°F (27°C)

- **Morning:**
  - **Visit the Louvre Museum** - Beat the crowds with an early visit. Don’t miss the Mona Lisa and other world-renowned artworks.
- **Afternoon:**
  - **Roland Garros (French Open)** - Head to the courts for some thrilling tennis matches. Pre-book your tickets via the [Event Link] to secure a spot.
- **Evening:**
  - **Candlelight Concerts at Sainte-Chapelle** - Revel in an intimate classical music performance in a mesmerizing Gothic setting. [Event Link] for details.
- **Highlight:** Capture sunset views from the Pont Alexandre III.

---

## Day 3: May 4, 2025 - Art and Culture Extravaganza

- **Weather:** Mostly sunny, high around 81°F (27°C)

- **Morning:**
  - **Street Art Fair in Montmartre** - Discover vibrant murals and meet local artists. Be sure to visit iconic spots like Sacré-Cœur.
- **Afternoon:**
  - **European Museums Night** - Take advantage of free and extended openings at major museums, including special events at the Louvre. Check [Event Link] for participating venues.
- **Evening:**
  - **Dinner at Le Consulat in Montmartre** - Experience traditional French bistro vibes with authentic dishes.
- **Travel Tip:** Wear comfortable shoes for walking to fully absorb Montmartre’s artistic ambiance.

---

## Day 4: May 5, 2025 - Adventure and Elegance

- **Weather:** Chance of rain, high around 78°F (26°C)

- **Morning:**
  - **Great Paris Steeplechase** - Enjoy the thrill of horse racing; rain gear might be handy. [Event Link] for detailed info and tickets.
- **Afternoon:**
  - **Cosplay Festival** - Celebrate pop culture at Paris Expo Porte de Versailles, a must for anime lovers. Check [Event Link] ahead for workshops and entry details.
- **Evening:**
  - **Dinner in Saint-Germain-des-Prés** - Dine at a cozy eatery exploring culinary delights from modest brasseries to refined Parisian cuisines.
- **Pro Tip:** Be prepared for intermittent showers with a compact umbrella.

---

## Day 5: May 6, 2025 - Farewell Paris

- **Weather:** Cloudy, high around 76°F (24°C)

- **Morning:**
  - **Leisurely Seine River Cruise** - Relax on a boat tour taking in iconic sites like the Eiffel Tower and Notre-Dame.
- **Afternoon:**
  - **Final Stroll and Shopping** - Explore Le Marais district for unique boutiques and last-minute souvenirs.
- **Evening:**
  - **Candlelight Concerts** - Cap off your trip with a final magical evening of classical music. Tickets and venue details on [Event Link].
- **Farewell Tip:** Dedicate time for a memorable café stop to enjoy a final indulgent Parisian pastry.

---

**Enjoy your unforgettable journey in Paris!** Make sure to review all event schedules and book your hotel early to embrace Paris at its finest. Bon voyage! 🌟

```

You can open this file in any Markdown viewer or commit it to version control.

> 📄 The final itinerary includes weather, events, hotel deals, and daily plans — all compiled in real time.
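To sanity-check the generated report from a script, for example in CI, you can read it back and list its day headings. This sketch assumes the `## Day N: ...` heading pattern seen in the sample output above. The model may phrase headings differently on other runs, so treat a mismatch as a reason to inspect the file rather than as a hard failure.

```python
import re
from pathlib import Path

# One match per line that looks like "## Day 3: May 4, 2025 - ...".
DAY_HEADING = re.compile(r"^## Day (\d+):\s*(.+)$", re.MULTILINE)


def day_headings(markdown: str) -> list[tuple[int, str]]:
    """Return (day_number, title) pairs for each '## Day N: ...' heading."""
    return [(int(num), title.strip()) for num, title in DAY_HEADING.findall(markdown)]


if __name__ == "__main__":
    report = Path("reports/paris_travel_itinerary.md")
    if report.exists():
        days = day_headings(report.read_text(encoding="utf-8"))
        print(f"{len(days)} day(s) found:", [n for n, _ in days])
```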

## 📊 Viewing Agent Run Summary in AgentOps

This project integrates with [AgentOps](https://app.agentops.ai/) to provide full visibility into agent execution, tool calls, and token usage. By setting the `AGENTOPS_API_KEY` in your `.env` file, all runs are automatically tracked.

Below is a sample AgentOps run for this project:

* **Duration**: 04m 12s

* **Cost**: \$0.0718500

* **LLM Calls**: 29

* **Tool Calls**: 4

* **Tokens**: 60,006
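These totals can be turned into per-call averages, which help when you estimate the cost of larger runs. The arithmetic below uses only the figures from this sample run. Your own numbers will vary with the model, the inputs, and the search results.

```python
# Figures from the sample AgentOps run above.
cost_usd = 0.07185
llm_calls = 29
tokens = 60_006

cost_per_call = cost_usd / llm_calls            # about $0.00248 per LLM call
tokens_per_call = tokens / llm_calls            # about 2,069 tokens per call
cost_per_1k_tokens = cost_usd / tokens * 1000   # about $0.0012 per 1K tokens

print(f"${cost_per_call:.5f} per call, {tokens_per_call:,.0f} tokens per call, "
      f"${cost_per_1k_tokens:.4f} per 1K tokens")
```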

You can view the complete execution trace, including tool calls, function arguments, and model responses in AgentOps.

```

🖇 AgentOps: Replay: https://app.agentops.ai/sessions?trace_id=234cfee99d72a45edf8d4fbd3d825461

```

> Note: The AgentOps link shown above is tied to the author's AgentOps account. To access your own replay, run the crew with your personal `AGENTOPS_API_KEY`.

## 🌟 Highlights

This cookbook has guided you through setting up and running a local travel planning workflow using AgentStack, CrewAI, and Dappier. You created a structured multi-agent execution that generates real-time itineraries based on weather, events, and hotel data.

Key tools utilized in this cookbook include:

* **AgentStack**: A CLI framework to scaffold agents, tasks, and tools with support for local execution and configuration.

* **CrewAI**: A lightweight multi-agent framework that executes agents in sequential or collaborative workflows.

* **OpenAI**: A leading provider of AI models capable of language understanding, summarization, and reasoning, used to power each agent’s intelligence.

* **Dappier**: A platform that connects agents to real-time, rights-cleared data from trusted sources, specializing in domains like web search, finance, and travel.

* **AgentOps**: A tool to track and analyze agent runs, including replay, cost breakdowns, and prompt histories.

This comprehensive setup allows you to adapt and expand the example for various scenarios requiring advanced data retrieval, multi-agent collaboration, and real-time context integration.

# 🖇️ Stock Market Research with AgentStack, CrewAI & Dappier: Real-Time Investment Intelligence using Multi-Agent AI

Source: https://docs.dappier.com/cookbook/recipes/agentstack-stock-market-researcher

This developer-focused cookbook demonstrates how to build and run a local multi-agent investment research system using **AgentStack**, **CrewAI**, and **Dappier**, powered by **OpenAI** and monitored via **AgentOps**. The setup guides you through generating structured investment reports from real-time financial and company data.

In this tutorial, you'll explore:

* **AgentStack**: A CLI-first framework for rapidly scaffolding AI agent workflows, generating agents, tasks, and tools with seamless CrewAI integration.

* **CrewAI**: A lightweight multi-agent orchestration engine, perfect for managing sequential or collaborative task execution between agents.

* **Dappier**: A platform that connects LLMs to real-time, rights-cleared data sources like stock market data, web search, and financial news.

* **OpenAI**: A powerful language model provider, enabling natural language understanding, real-time reasoning, and content generation.

* **AgentOps**: A monitoring tool to track, replay, and analyze agent runs with detailed visibility into agent reasoning and tool use.

This guide walks you through creating a **local, production-grade AI research agent system** to analyze companies like Amazon, generate investment snapshots, and compile markdown-formatted financial reports — all grounded in **real-time market data** from Dappier.

> 🛠️ All tasks and agents in this cookbook are generated using `agentstack` commands and executed in a Python project on your local machine. No notebooks, no server setup required.

## 📦 Project Initialization and Setup

To get started, we'll use the `agentstack` CLI to scaffold a fully functional multi-agent project. This command generates the base project structure, config files, virtual environment, and a ready-to-customize `crew.py` file with support for tools like Dappier and frameworks like CrewAI.

### Step 1: Initialize the Project

Run the following command in your terminal:

```bash

agentstack init stock_market_research

```

This will generate a folder named `stock_market_research` with the complete project structure.

### Step 2: Move Into the Project

```bash

cd stock_market_research

```

### Step 3: Activate the Virtual Environment

Activate the virtual environment created by `agentstack`:

```bash

source .venv/bin/activate

```

If you're using a Windows terminal, use:

```bash

.venv\Scripts\activate

```

> ✅ You now have a fully bootstrapped multi-agent AI project using AgentStack and CrewAI.

## 🔑 Setting Up API Keys

To enable real-time data access and AI reasoning, you’ll need API keys for the following services:

* **OpenAI** – for LLM-powered reasoning and summarization

* **Dappier** – for real-time stock market data and web search

* **AgentOps** – for run monitoring and debugging

These keys are stored in the `.env` file created during project initialization.

### Step 1: Open the `.env` file

Inside your project root, open `.env` and update it with your API keys:

```env

# OpenAI

OPENAI_API_KEY=your_openai_key_here

# Dappier

DAPPIER_API_KEY=your_dappier_key_here

# AgentOps (Optional but recommended)

AGENTOPS_API_KEY=your_agentops_key_here

```

You can get your keys here:

* 🔑 Get your OpenAI API Key [here](https://platform.openai.com/account/api-keys)

* 🔑 Get your Dappier API Key [here](https://platform.dappier.com/profile/api-keys) — free credits available

* 🔑 Get your AgentOps API Key [here](https://app.agentops.ai/signin) - enables run tracking and replay

> 🧪 Make sure to keep these keys private. Do not commit `.env` to version control.

## ⚙️ Installing Dependencies

After initializing the project and configuring your API keys, install the required dependencies to ensure everything runs smoothly.

The key packages used in this project are:

* [`crewai`](https://pypi.org/project/crewai/) – Multi-agent execution and orchestration

* [`agentstack`](https://pypi.org/project/agentstack/) – CLI toolchain for generating agents, tasks, and crews

* [`dappier`](https://pypi.org/project/dappier/) – Real-time data access layer for tools like stock search and news

* [`openai`](https://pypi.org/project/openai/) – LLM access to GPT-4o and other OpenAI models (automatically included)

### Step 1: Sync All Dependencies from `pyproject.toml`

If you're using the pre-generated project setup from `agentstack init`, run:

```bash

uv lock

uv sync

```

* `uv lock` will generate the `uv.lock` file based on your `pyproject.toml`

* `uv sync` will install all dependencies into the virtual environment

### Step 2: Add or Upgrade Individual Packages

If you need to upgrade packages manually, use:

```bash

uv remove "agentstack[crewai]"

uv add crewai

uv add agentstack

```

> ✅ You now have all the required dependencies installed locally and locked for reproducible agent execution.

## 👤 Creating Agents

Now that your environment is ready, let’s generate the agents that will power the stock market research workflow. These agents are created using the AgentStack CLI and are defined declaratively in `agents.yaml`.

### Step 1: Generate the Agents

You’ll use the following command to scaffold each agent:

```bash

agentstack generate agent

```

You’ll be prompted to enter the agent's name, role, goal, backstory, and model. Repeat this step for each agent in your system.

Here are the agents created for this project:

#### 🧠 `web_researcher`

```bash

agentstack g a web_researcher \
  --role="A company research analyst that collects structured business data, financials, and competitive insights from the Dappier real-time web search." \
  --goal="To compile detailed company profiles using real-time data, covering company overview, financial performance, and peer benchmarking." \
  --backstory="Trained to support investment research workflows, this agent uses Dappier’s real-time web search to gather trustworthy and current business information. It builds company snapshots with industry, CEO, market cap, and financial metrics like revenue and net income." \
  --llm=openai/gpt-4o

```

#### 📊 `stock_insights_analyst`

```bash

agentstack g a stock_insights_analyst \
  --role="A stock market intelligence analyst that retrieves real-time financial data and curated news using the Dappier stock market data search." \
  --goal="To deliver up-to-date stock snapshots, performance metrics, and categorized financial news for informed investment analysis." \
  --backstory="Trained to analyze real-time financial markets using Dappier’s stock market data tool, this agent specializes in stock-specific queries. It provides live insights into stock price movements, valuation ratios, earnings, and sentiment-tagged news from reliable financial feeds like Polygon.io." \
  --llm=openai/gpt-4o

```

#### 📝 `report_analyst`

```bash
agentstack g a report_analyst \
  --role="A financial report analyst that consolidates real-time stock and company insights into a comprehensive markdown report." \
  --goal="To generate an investor-facing, markdown-formatted summary combining company profile, financials, benchmarking, stock performance, and real-time news with actionable insights." \
  --backstory="Specialized in synthesizing structured data retrieved by other research agents, this agent produces detailed markdown reports that explain what's happening with a given stock ticker, why it matters, and what the short-term outlook may be." \
  --llm=openai/gpt-4o
```

Once generated, AgentStack automatically adds these to your `agents.yaml` file.
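Each generated entry holds the values entered for that agent (role, goal, backstory, and model). If you later edit `agents.yaml` by hand, a small check can catch a missing field before a run. The sketch below is hypothetical: it assumes the YAML has already been loaded into a plain dict keyed by agent name and that the model is stored under an `llm` key, mirroring the `--llm` flag above. `missing_fields` and `check_llm_format` are illustrative helpers, not part of AgentStack.

```python
# Hypothetical sanity check, not part of AgentStack. Assumes agents.yaml was
# loaded into a dict keyed by agent name, with the model under an "llm" key.
REQUIRED_FIELDS = ("role", "goal", "backstory", "llm")


def missing_fields(agents: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Return, per agent name, the required fields that are absent or empty."""
    problems: dict[str, list[str]] = {}
    for name, spec in agents.items():
        missing = [field for field in REQUIRED_FIELDS if not spec.get(field)]
        if missing:
            problems[name] = missing
    return problems


def check_llm_format(llm: str) -> bool:
    """Check the 'provider/model' shape used above, e.g. 'openai/gpt-4o'."""
    provider, sep, model = llm.partition("/")
    return bool(provider and sep and model)


agents = {
    "web_researcher": {
        "role": "A company research analyst ...",
        "goal": "To compile detailed company profiles ...",
        "backstory": "Trained to support investment research workflows ...",
        "llm": "openai/gpt-4o",
    },
    "report_analyst": {
        "role": "A financial report analyst ...",
        "goal": "To generate an investor-facing ...",
        "backstory": "",  # left empty on purpose so the check reports it
        "llm": "openai/gpt-4o",
    },
}

print(missing_fields(agents))  # {'report_analyst': ['backstory']}
print(all(check_llm_format(a["llm"]) for a in agents.values()))  # True
```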

> 👥 These agents will work together to build a real-time stock market report using data from Dappier and OpenAI reasoning.

## ✅ Creating Tasks

Each task defines a specific responsibility to be performed by one of the agents. In this project, tasks are tightly scoped and executed sequentially by CrewAI, allowing agents to collaborate and generate a full investment report.

Tasks are defined declaratively in `tasks.yaml` and are created using the AgentStack CLI.

### Step 1: Generate Tasks Using the CLI

Run the following command to create a new task:

```bash

agentstack generate task

```

You can supply the following, either at the prompts or as command-line arguments:

* `task_name` (required)

* `--description` – a detailed instruction that can reference the `{company_name}` and `{timestamp}` placeholders

* `--expected_output` – structured data, tables, or markdown expected from the task

* `--agent` – the agent responsible for this task
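Placeholders such as `{company_name}` and `{timestamp}` are filled in from the inputs passed to `kickoff()`. The sketch below approximates that substitution with Python's `str.format_map`; this is an assumption for illustration, and CrewAI's own interpolation may differ in details such as how missing inputs are reported. The helpers `placeholders` and `render` are hypothetical.

```python
import string


def placeholders(template: str) -> set[str]:
    """List the {name} fields a task description expects."""
    return {field for _, field, _, _ in string.Formatter().parse(template) if field}


def render(template: str, inputs: dict[str, str]) -> str:
    """Fill placeholders, failing loudly if an input is missing."""
    missing = placeholders(template) - set(inputs)
    if missing:
        raise KeyError(f"missing inputs: {sorted(missing)}")
    return template.format_map(inputs)


description = (
    "As of {timestamp}, fetch the company overview for {company_name} "
    "using real-time web search with the timestamp."
)
inputs = {"company_name": "tesla", "timestamp": "2025-01-01T00:00:00+00:00"}

print(sorted(placeholders(description)))  # ['company_name', 'timestamp']
print(render(description, inputs))
```

Checking for missing keys up front makes a typo in `inputs.yaml` fail with a readable message instead of a bare `KeyError` from deep inside the formatting call.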

Here are the tasks used in this project:

#### 🏢 `company_overview`

```bash
agentstack g t company_overview \
  --description="As of {timestamp}, fetch the company overview for {company_name} using real-time web search with the timestamp. Include company profile, industry, sector, CEO, headquarters location, employee count, market capitalization and stock ticker symbol." \
  --expected_output="A structured company profile including: Industry, Sector, CEO, HQ Location, Employees, Market Cap, and Ticker Symbol." \
  --agent=web_researcher
```

#### 📉 `financials_performance`

```bash
agentstack g t financials_performance \
  --description="As of {timestamp}, use real-time web search with the timestamp to extract financial performance data for {company_name}, including Revenue (TTM), Net Income (TTM), Year-over-Year revenue growth, gross margin, and recent quarterly trends. Include any earnings trends or management commentary available." \
  --expected_output="A summary of financial metrics for {company_name}: Revenue (TTM), Net Income (TTM), YoY Growth, Gross Margin, and Quarterly Trends." \
  --agent=web_researcher
```

#### 🆚 `competitive_benchmarking`

```bash
agentstack g t competitive_benchmarking \
  --description="As of {timestamp}, perform real-time web search with the timestamp to identify 3-5 peer companies in the same sector as {company_name}. Extract and compare key metrics such as P/E ratio, revenue, stock price, and market cap. Highlight any standout metrics where {company_name} outperforms or underperforms." \
  --expected_output="A table comparing {company_name} and 3–5 peers on P/E, revenue, stock price, and market cap with highlights." \
  --agent=web_researcher
```

#### 💹 `real_time_stock_snapshot`

```bash
agentstack g t real_time_stock_snapshot \
  --description="As of {timestamp}, convert {company_name} to its stock ticker symbol and retrieve a real-time stock snapshot using Dappier’s stock market data tool with the timestamp. Include current price with % daily change, volume, 52-week high/low, P/E ratio, EPS, dividend yield, and chart data for 1D, 5D, 1M, YTD, and 1Y in the query." \
  --expected_output="Structured snapshot of {company_name}: Price, Change %, Volume, 52W High/Low, P/E, EPS, Dividend, and Charts." \
  --agent=stock_insights_analyst
```

#### 📰 `news_and_sentiment`

```bash
agentstack g t news_and_sentiment \
  --description="As of {timestamp}, compile a comprehensive, markdown-formatted investment report for {company_name} by synthesizing the outputs of all prior tasks: company overview, financial performance, competitive benchmarking, real-time stock snapshot, and categorized financial news. Use the timestamp in all queries. Include a concise AI-generated company summary, structured data tables, sentiment-tagged news, and a narrative insight section." \
  --expected_output="A markdown-formatted investment report containing:
1. Quick AI summary of {company_name} (e.g., 'Apple is a global tech leader…')
2. Structured company profile: Industry, Sector, CEO, HQ, Employees, Market Cap
3. Financial performance metrics: Revenue (TTM), Net Income (TTM), YoY Growth, Gross Margin, Trends
4. Competitive benchmarking table: P/E, Revenue, Stock Price, Market Cap vs. 3–5 peers
5. Real-time stock snapshot: Price, % Change, Volume, 52W High/Low, P/E, EPS, Dividend, charts
6. Categorized news: Earnings, Analyst Ratings, Market Moves, Partnerships, Legal/Regulatory (with sentiment tags)
7. Final 3-part insight section:
   - What's going on with {company_name}
   - Why it matters
   - Outlook (clearly marked as not financial advice)" \
  --agent=stock_insights_analyst
```

#### 📄 `generate_investment_report`

```bash
agentstack g t generate_investment_report \
  --description="As of {timestamp}, compile a markdown-formatted investment report for {company_name} using all prior task outputs. Include a summary, structured profile, financial metrics, peer comparisons, charts, news, and a 3-part insight section." \
  --expected_output="A markdown report with company overview, financials, benchmarking, stock snapshot, categorized news, and AI-generated outlook." \
  --agent=report_analyst
```

> ⚠️ The following two fields must be added manually to the `generate_investment_report` task inside `tasks.yaml`, as they are not currently supported via the CLI:

```yaml

output_file: reports/{company_name}_investment_report.md

create_directory: true

```
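Together, these two fields mean the report path is derived from the `company_name` input (for `tesla`, that is `reports/tesla_investment_report.md`) and that the `reports/` folder is created if it does not exist yet. The sketch below reproduces that intended effect with `pathlib` inside a temporary directory; `write_report` is a hypothetical helper, not CrewAI's implementation.

```python
import tempfile
from pathlib import Path

# Same template as the output_file field above.
OUTPUT_FILE = "reports/{company_name}_investment_report.md"


def write_report(base_dir: Path, company_name: str, markdown: str,
                 create_directory: bool = True) -> Path:
    """Resolve output_file for one company and write the report there."""
    path = base_dir / OUTPUT_FILE.format(company_name=company_name)
    if create_directory:
        # Mirrors the intent of `create_directory: true`.
        path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(markdown, encoding="utf-8")
    return path


with tempfile.TemporaryDirectory() as tmp:
    report = write_report(Path(tmp), "tesla", "# Tesla investment report\n")
    print(report.relative_to(tmp).as_posix())  # reports/tesla_investment_report.md
```

Without the directory step, writing into a missing `reports/` folder raises `FileNotFoundError`, which is why the extra field matters.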

> 🪄 All of the above tasks will be executed in sequence using CrewAI when you run the crew.
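Conceptually, sequential execution means tasks run in the order they are defined, and later tasks can build on earlier results, which is how `generate_investment_report` draws on the research before it. The toy pipeline below illustrates that flow with plain functions. It assumes each step sees all earlier outputs; how much prior output CrewAI actually passes along depends on its own configuration (for example, a task's `context` setting), and the step functions here are placeholders, not CrewAI calls.

```python
from typing import Callable

# Each step receives the run inputs plus the outputs of all earlier steps.
Step = Callable[[dict[str, str], dict[str, str]], str]


def run_sequential(steps: list[tuple[str, Step]], inputs: dict[str, str]) -> dict[str, str]:
    """Run steps in order, recording each output under the step's name."""
    outputs: dict[str, str] = {}
    for name, step in steps:
        outputs[name] = step(inputs, dict(outputs))  # pass a copy of prior results
    return outputs


steps: list[tuple[str, Step]] = [
    ("company_overview", lambda i, prev: f"overview of {i['company_name']}"),
    ("financials_performance", lambda i, prev: f"financials of {i['company_name']}"),
    # The final step only combines what earlier steps produced.
    ("generate_investment_report", lambda i, prev: " | ".join(prev.values())),
]

results = run_sequential(steps, {"company_name": "tesla"})
print(results["generate_investment_report"])
# overview of tesla | financials of tesla
```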

## 🛠️ Adding Tools to Agents

Tools enable agents to interact with external services like Dappier. In this project, Dappier provides real-time access to financial data and web search, powering all the data-gathering tasks.

### Step 1: Add Dappier Tools to the Project

Instead of assigning tools one by one, you can add all Dappier tools at once:

```bash

agentstack tools add dappier

```

This will register the full Dappier toolset to the project and make it available through the `agentstack.tools["dappier"]` registry.

### Step 2: Select Specific Tools Per Agent in Code

Rather than assigning tools through the CLI, agents in this project attach only the tools they need, using a custom helper function in `crew.py`:

```python
import agentstack


def get_dappier_tool(tool_name: str):
    # Return the registered Dappier tool with this name, or None if absent.
    for tool in agentstack.tools["dappier"]:
        if tool.name == tool_name:
            return tool
    return None
```

Then each agent uses this function to assign a single tool:

#### 🧠 `web_researcher`

```python

tools = [get_dappier_tool("real_time_web_search")]

```

#### 📊 `stock_insights_analyst`

```python

tools = [get_dappier_tool("stock_market_data_search")]

```

> ⚙️ This approach ensures that each agent only receives the exact tool it needs, while keeping tool registration centralized and clean.
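One caveat with `get_dappier_tool` as written: a misspelled name returns `None`, and `tools = [None]` only fails later, inside the agent. A stricter variant can raise immediately and list the names that do exist. The sketch below uses a hypothetical `FakeTool` stand-in so it runs without AgentStack; it relies only on each tool having a `.name` attribute, as the helper above does.

```python
from dataclasses import dataclass
from typing import Any, Iterable


@dataclass
class FakeTool:
    """Hypothetical stand-in for a registered tool; only `.name` is used."""
    name: str


def require_tool(tools: Iterable[Any], tool_name: str) -> Any:
    """Return the tool with this name, or raise listing the available names."""
    candidates = list(tools)
    for tool in candidates:
        if tool.name == tool_name:
            return tool
    available = ", ".join(sorted(t.name for t in candidates))
    raise ValueError(f"no tool named {tool_name!r}; available: {available}")


registry = [FakeTool("real_time_web_search"), FakeTool("stock_market_data_search")]
print(require_tool(registry, "stock_market_data_search").name)
```

In `crew.py`, the equivalent call would pass `agentstack.tools["dappier"]` as the first argument instead of the stand-in list.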

## 📝 Providing Inputs & Setting Timestamps

Before running the crew, you need to define the runtime inputs that agents and tasks will use. The AgentStack project already includes an `inputs.yaml` file, which is used to inject these inputs when the crew is executed.

In this project, we use two dynamic inputs:

* `company_name`: The company to analyze (e.g., "tesla")

* `timestamp`: The current UTC time, injected automatically via code

### Step 1: Update `inputs.yaml`

Open the pre-generated `inputs.yaml` file and set the target company:

```yaml

company_name: tesla

```

You can replace `tesla` with any other publicly traded company.

### Step 2: Inject a Real-Time Timestamp in `main.py`

To provide the current timestamp at execution time, update the `run()` function in `main.py`:

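```python
from datetime import datetime, timezone

import agentstack

# `instance` is the crew object already created in the generated main.py.


def run():
    """
    Run the agent.
    """
    inputs = agentstack.get_inputs()
    # Add the current UTC time so tasks can reference {timestamp}.
    inputs["timestamp"] = datetime.now(timezone.utc).isoformat()
    instance.kickoff(inputs=inputs)
```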

This will:

* Dynamically inject the current UTC timestamp into the input dictionary

* Allow all tasks referencing `{timestamp}` in `tasks.yaml` to use consistent timing context
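`datetime.now(timezone.utc).isoformat()` produces an ISO 8601 string with an explicit `+00:00` offset, so every task description receives the same unambiguous UTC instant. The sketch below uses a fixed datetime instead of `now()` so the output is reproducible.

```python
from datetime import datetime, timezone

# A fixed instant stands in for datetime.now(timezone.utc).
fixed = datetime(2025, 6, 1, 14, 30, 5, 123456, tzinfo=timezone.utc)
stamp = fixed.isoformat()
print(stamp)  # 2025-06-01T14:30:05.123456+00:00

# The string round-trips, so the same instant can be recovered from it.
assert datetime.fromisoformat(stamp) == fixed

# Dropping microseconds gives a shorter stamp if you prefer it in prompts.
print(fixed.replace(microsecond=0).isoformat())  # 2025-06-01T14:30:05+00:00
```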

> ⏱️ Timestamped input ensures your reports are anchored to the moment of execution.

## 🚀 Running the Crew

Once your agents, tasks, tools, and inputs are all set up, you're ready to run the multi-agent crew. The crew will execute each task in sequence, collaborating to generate a fully structured investment research report using real-time data.

### Step 1: Run the AgentStack Project

To start the crew execution, run the following command from the project root:

```bash

agentstack run

```

This command:

* Loads your agents from `agents.yaml`

* Loads your tasks from `tasks.yaml`

* Injects inputs from `inputs.yaml`, plus the runtime `timestamp` added in `run()`

* Executes all tasks sequentially via CrewAI

* Writes the final markdown report to the `output_file` path defined in `tasks.yaml`

> ✅ You should see terminal output as each agent completes its assigned task.

### Step 2: Debug with `--debug` Mode (Optional)

For detailed execution traces, run with the debug flag:

```bash

agentstack run --debug

```

This enables verbose logging, including:

* Which agent is running

* Which tool is being used

* Real-time function call results

* Intermediate outputs for each task

> 🧪 Use debug mode to troubleshoot tool usage or model behavior during each step.

### Step 3: View the Final Output